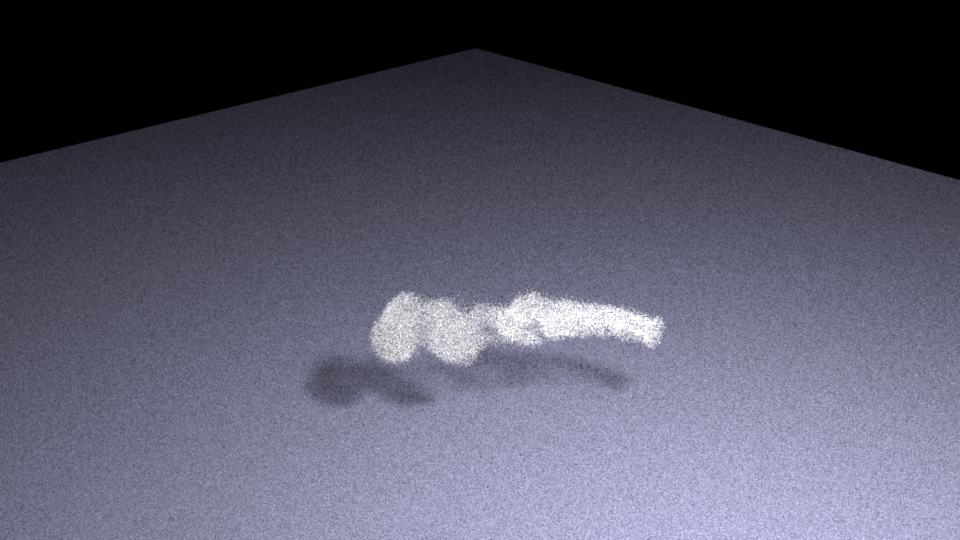

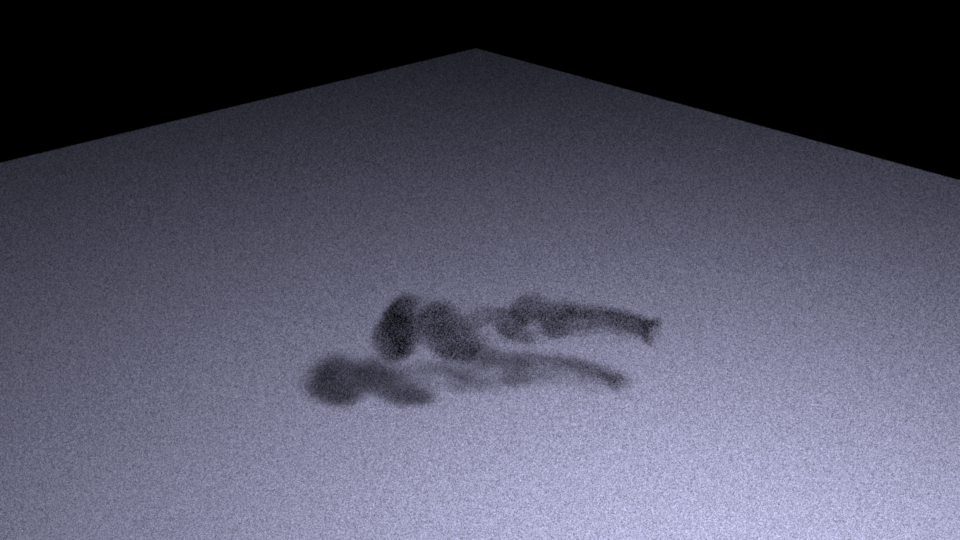

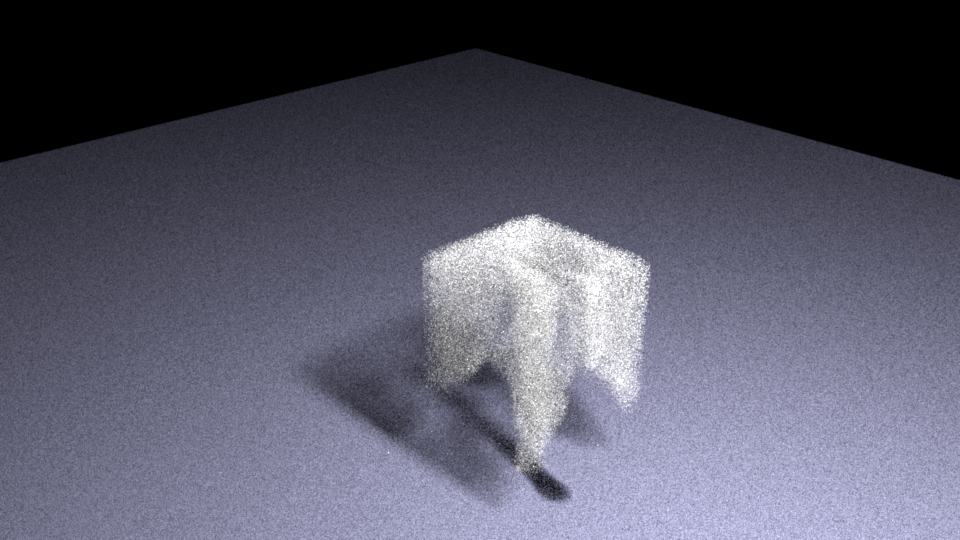

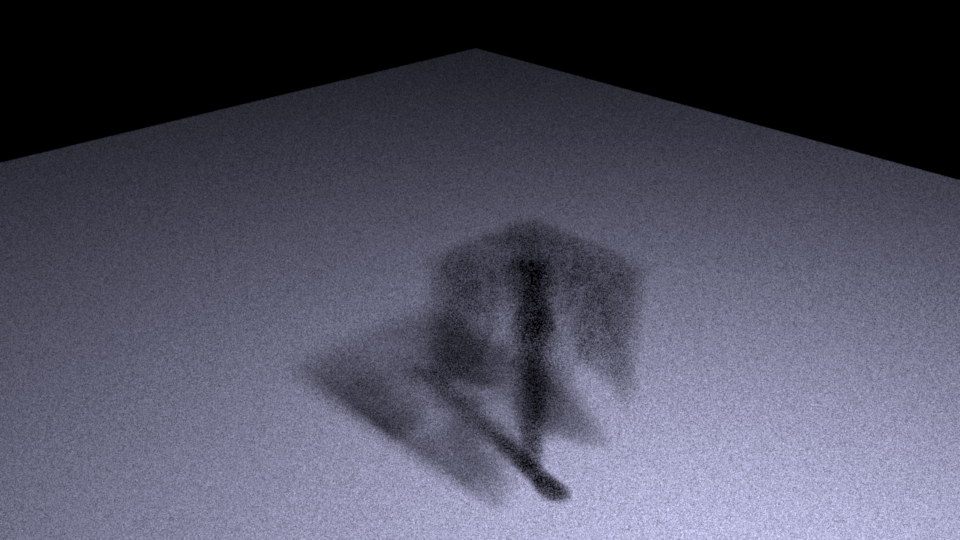

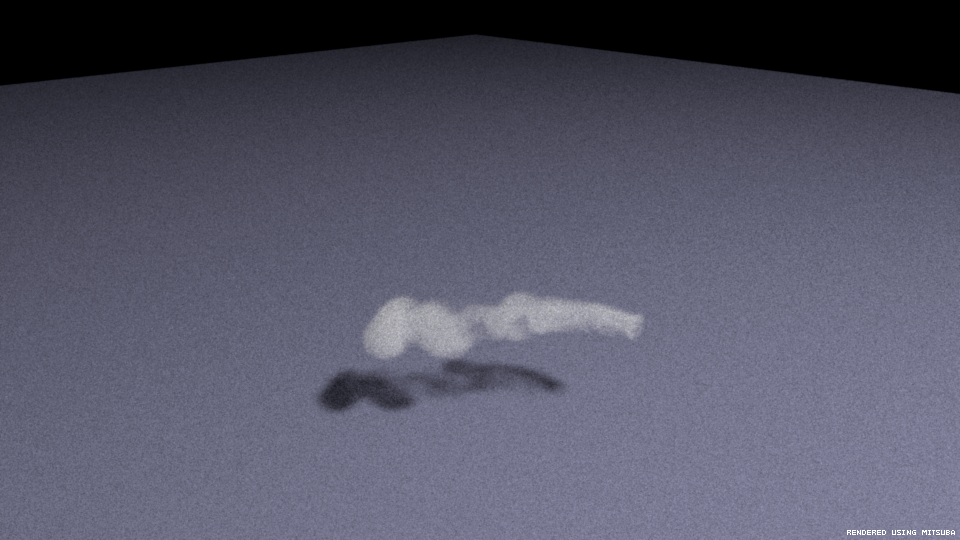

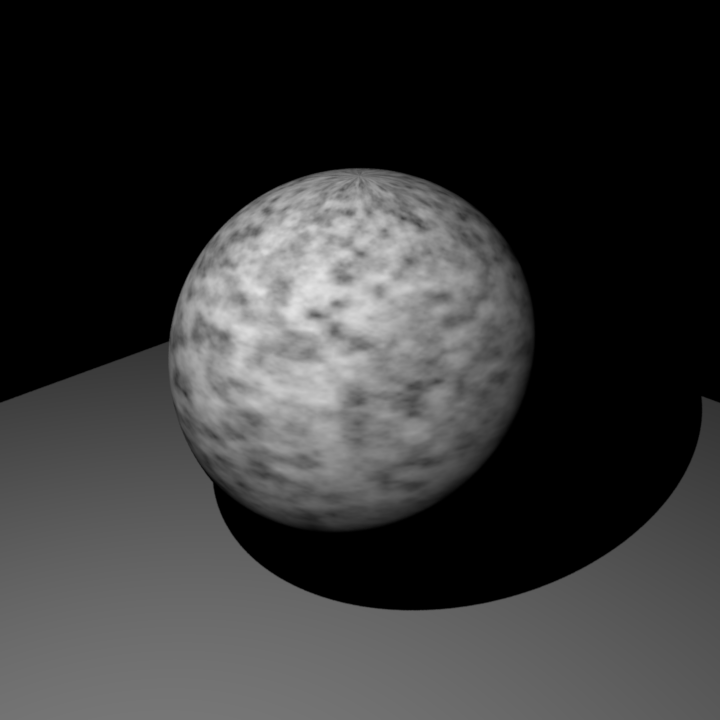

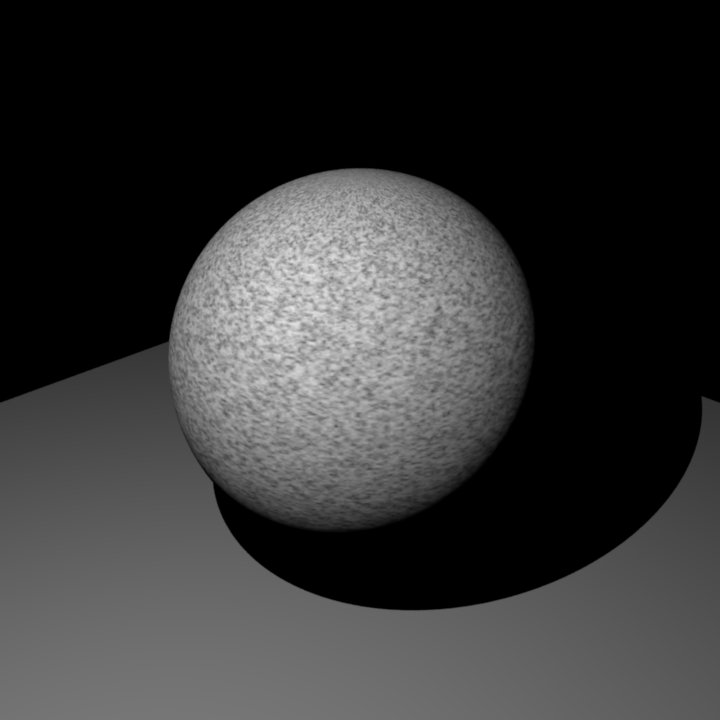

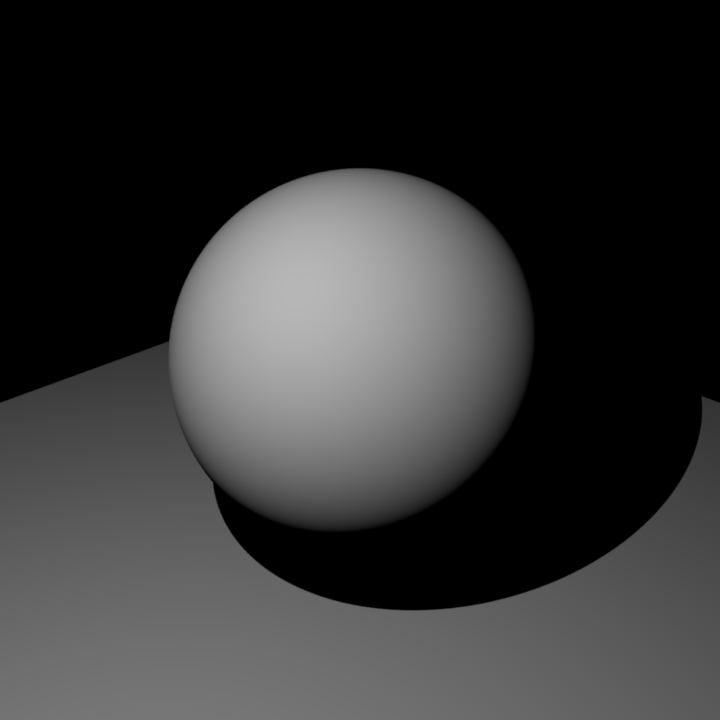

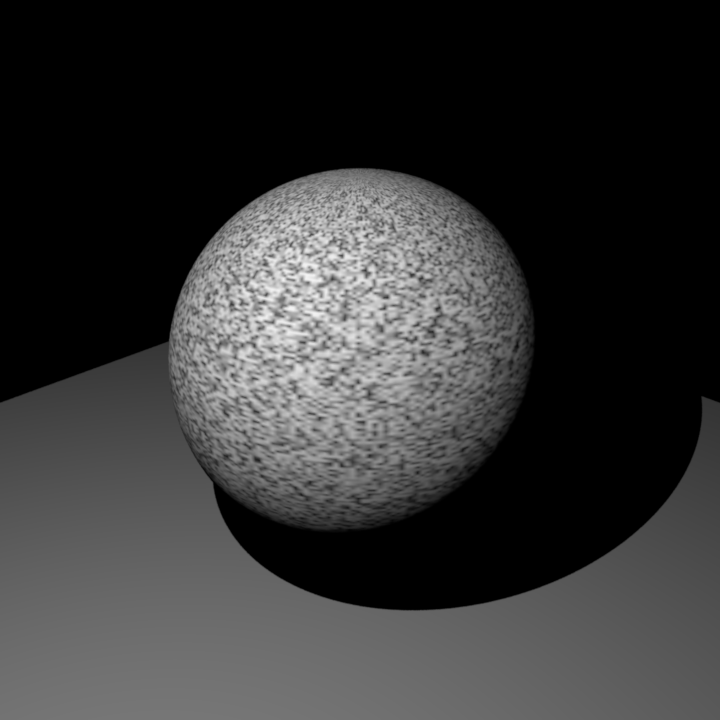

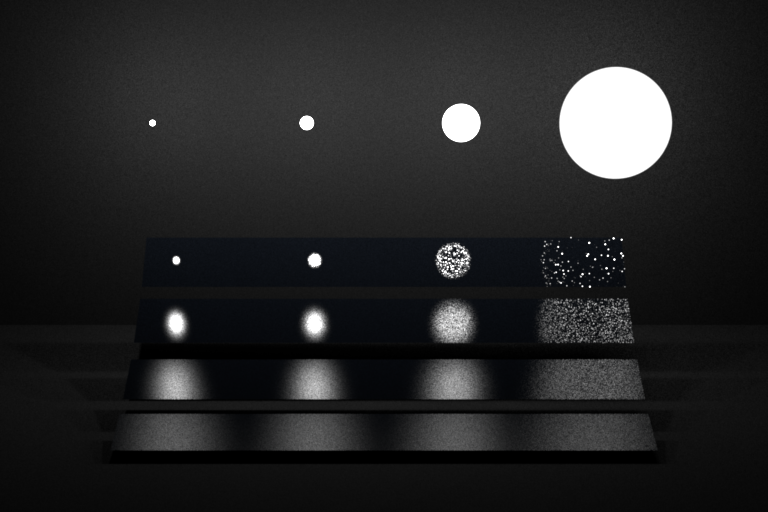

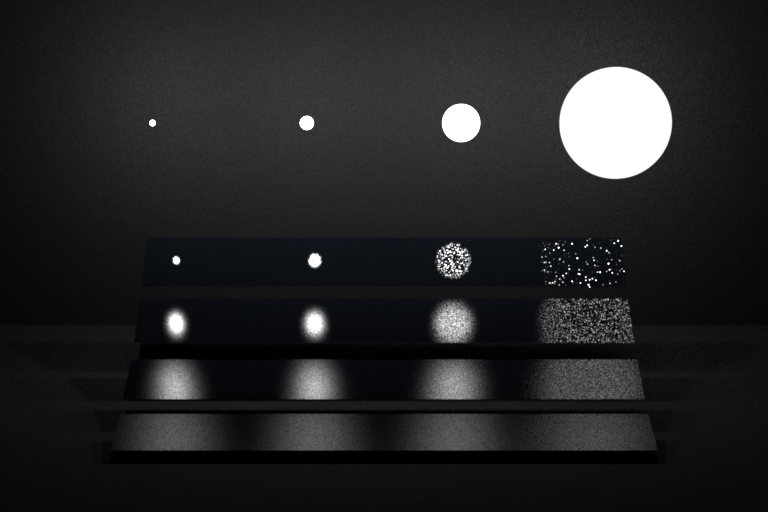

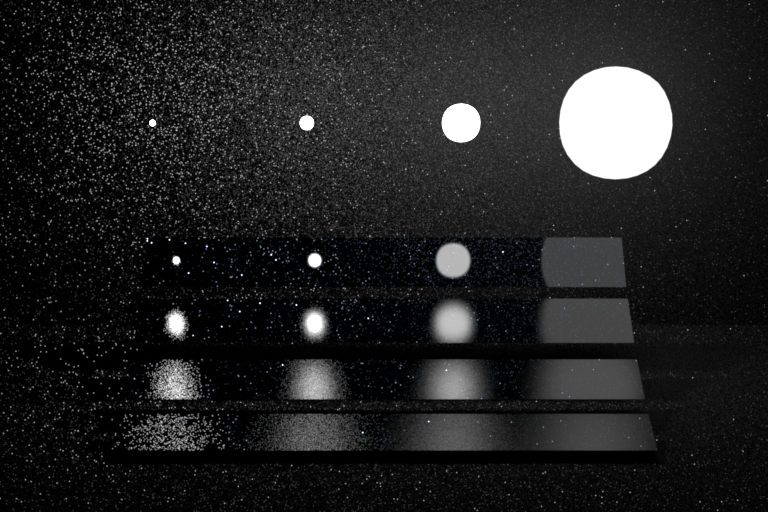

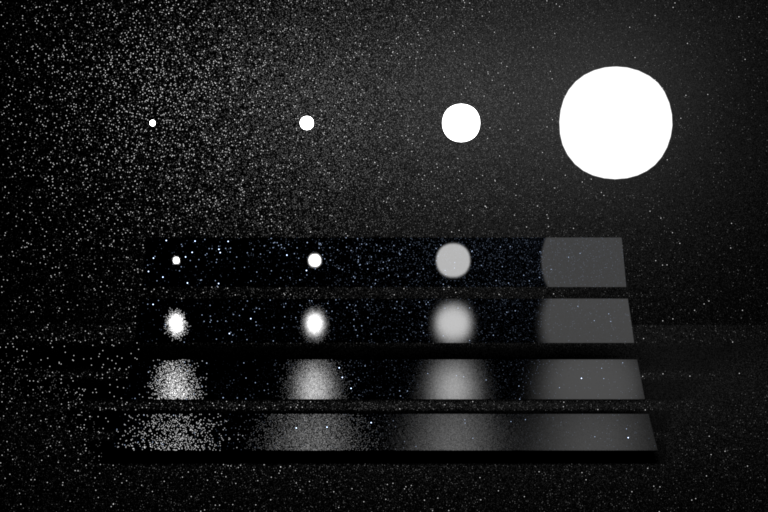

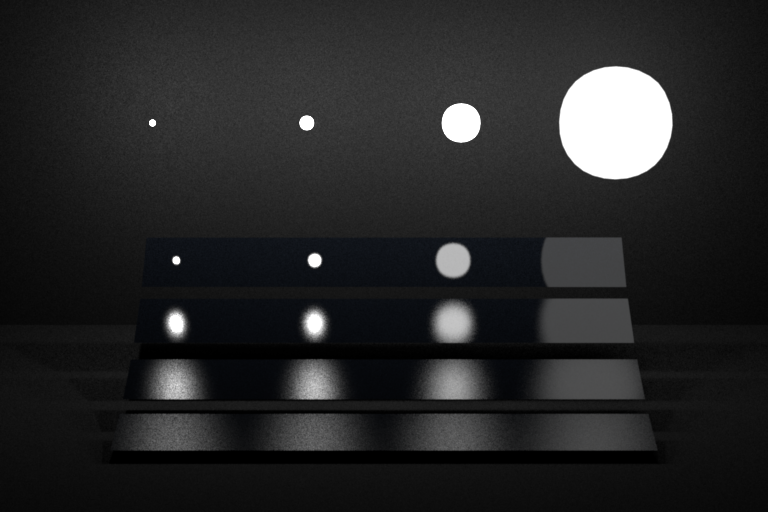

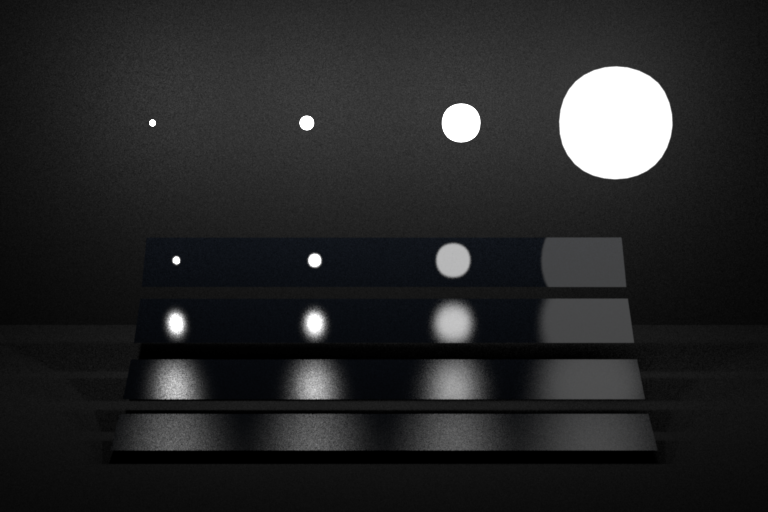

Average Visibility Integrator

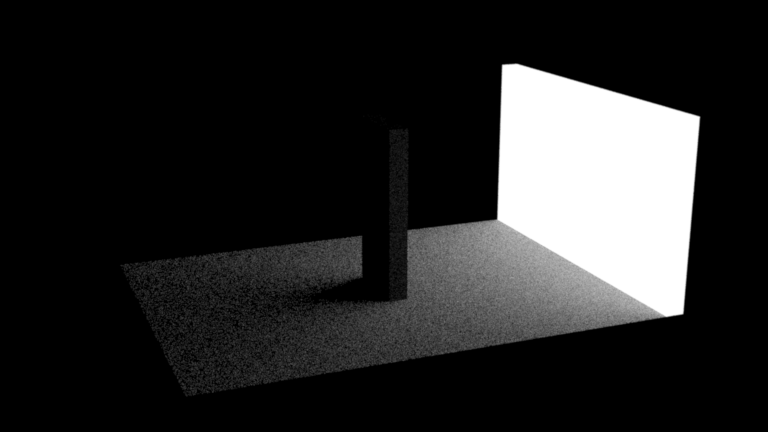

For each point where a camera ray intersects the scene, we sample a random direction on the hemisphere around it (sampleUniformHemisphere) and construct a new ray in that direction. Under ambient lighting, if this new ray intersects a mesh in the scene, the ray is blocked and the sample is shaded black; otherwise it is shaded with the color of the ambient light. Finally, we average the colors over all camera rays of each pixel and return the result. Rendering takes a while when we use 1024 sample rays per pixel.

This feature is also known as ambient occlusion (AO), a method for approximating global illumination in a scene. One interesting detail is that we can set min_t and max_t on the secondary rays when testing whether they are blocked by other objects. Without this limit, a scene whose objects are all enclosed in a closed box renders as a completely black image.
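The average visibility estimate and the effect of max_t can be sketched in a few lines of Python. This is a minimal illustration and not the report's Nori code: `average_visibility`, `ceiling_occluder`, and the `occluded(direction, max_t)` callback are hypothetical names standing in for the renderer's shadow-ray query, and the shading point is assumed to sit at the origin with its normal along +z.

```python
import math
import random

def sample_uniform_hemisphere(u1, u2):
    """Map two uniform numbers in [0, 1) to a unit direction on the z-up hemisphere."""
    z = u1  # cos(theta) is uniformly distributed for uniform hemisphere sampling
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), z)

def average_visibility(occluded, n_samples, max_t, rng):
    """Fraction of sampled hemisphere directions that are not blocked within max_t.

    `occluded(direction, max_t)` is a hypothetical stand-in for a shadow-ray test.
    """
    visible = 0
    for _ in range(n_samples):
        d = sample_uniform_hemisphere(rng.random(), rng.random())
        if not occluded(d, max_t):
            visible += 1
    return visible / n_samples

def ceiling_occluder(height):
    """Toy scene: an infinite plane z = height above the shading point."""
    def occluded(d, max_t):
        if d[2] <= 0.0:
            return False          # ray runs parallel to the plane and never hits it
        t = height / d[2]         # distance along the ray to the plane
        return t <= max_t         # hits beyond max_t are ignored
    return occluded

rng = random.Random(0)
scene = ceiling_occluder(1.0)
unbounded = average_visibility(scene, 256, float("inf"), rng)  # enclosed: black
bounded = average_visibility(scene, 256, 0.5, rng)             # short rays: lit
```

With an unbounded max_t every ray eventually hits the enclosing geometry, which is the all-black case described above; limiting max_t restricts occlusion to nearby geometry.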

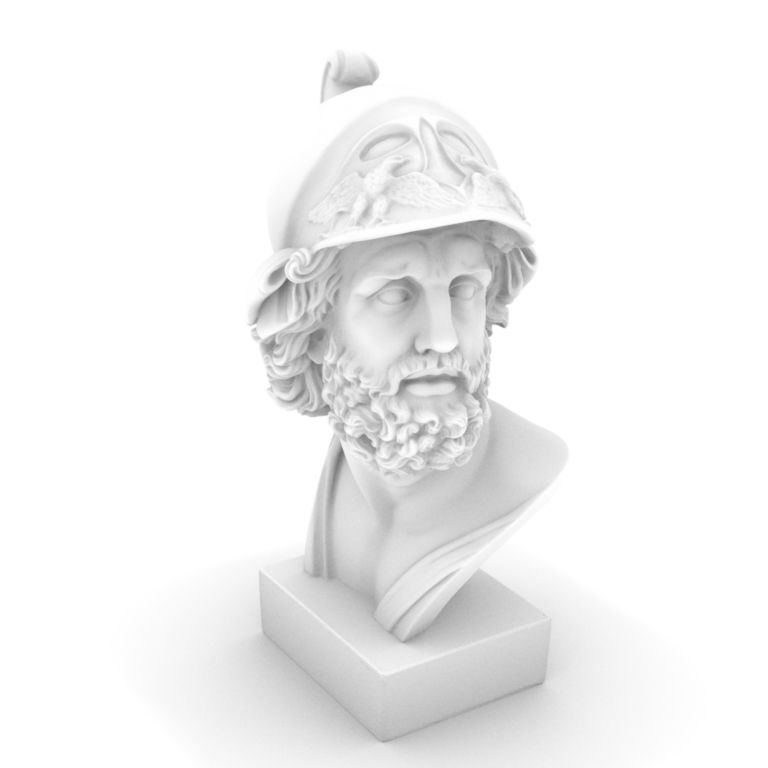

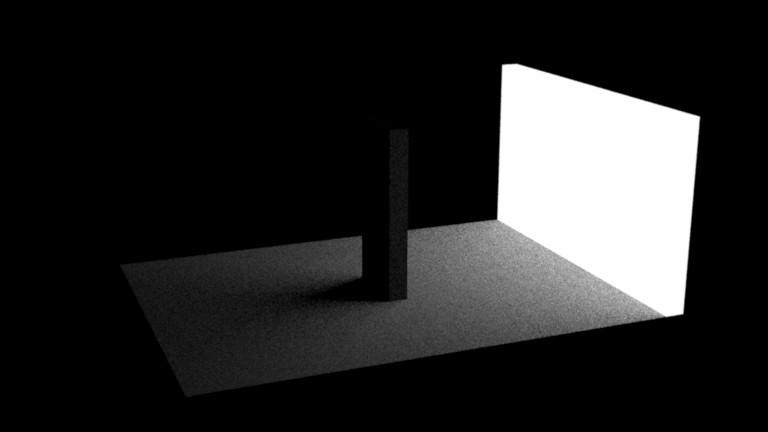

Rendered results of ajax and sponza are shown below.

AV Comparison: ajax

AV Comparison: sponza

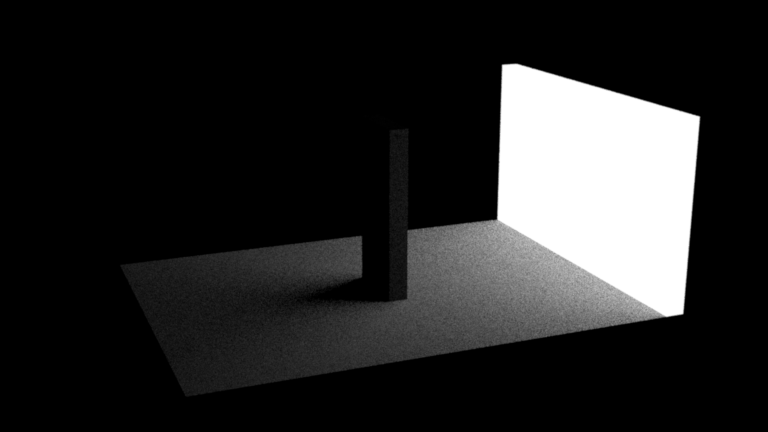

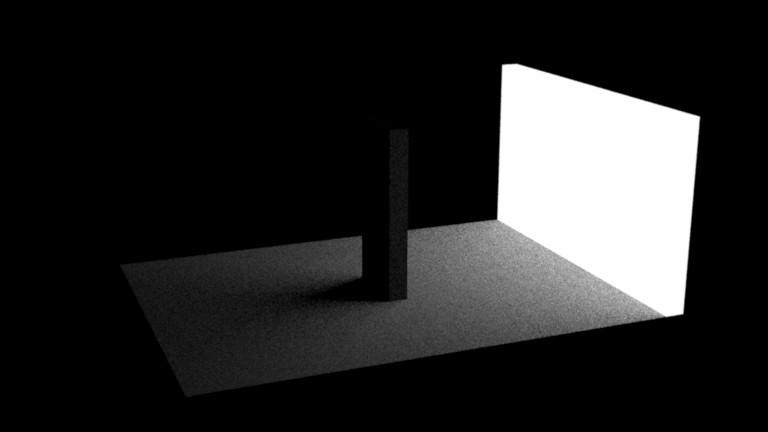

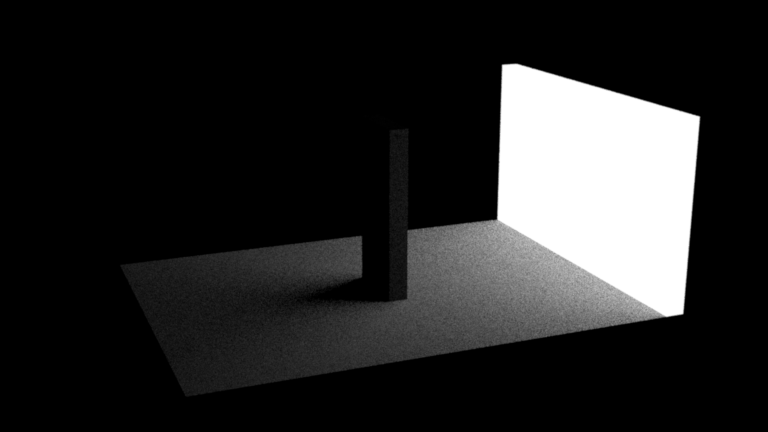

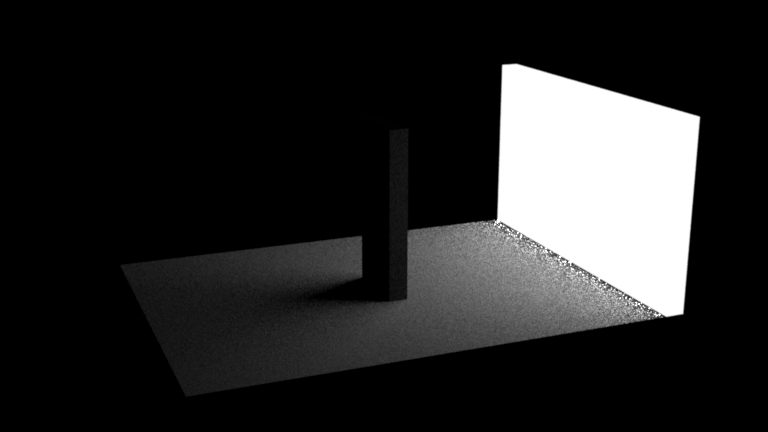

Direct Illumination Integrator

Here we implement the point light class and its member functions. Unlike other types of light sources such as area lights, a point light is treated as an infinitely small point with no area. To shade a point under a point light, the emitter value is the pre-defined power of the point light divided by 4*PI and by the squared distance from the intersection to the light. The calculation of Li is similar to AO; the differences are the BSDF value at the intersection point, evaluated at its UV coordinate, and the absolute cosine of the angle between the shading normal and the ray. More specifically, for each shading point we sample one of the point lights in the scene, as well as a BRDF outgoing direction for the intersection tests. The shading value is computed by integrating (here, summing) point light * BSDF * |cos_theta|.
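As a rough illustration of the product point light * BSDF * |cos_theta|, the following Python sketch shades a single point under one point light. It assumes a monochrome Lambertian surface (BRDF = albedo / PI) and uses the inverse-square falloff of an isotropic point source; the function names and the `visible` flag, which stands in for the shadow-ray test, are hypothetical and not taken from the report's code.

```python
import math

def point_light_radiance(power, light_pos, shading_pos):
    """Incident radiance from an isotropic point light: power / (4 * pi * d^2)."""
    d2 = sum((l - s) ** 2 for l, s in zip(light_pos, shading_pos))
    return power / (4.0 * math.pi * d2)

def shade_point_light(power, light_pos, shading_pos, normal, albedo, visible=True):
    """Direct lighting at a Lambertian point: Li * BRDF * |cos(theta)|."""
    if not visible:               # shadow ray was blocked
        return 0.0
    to_light = [l - s for l, s in zip(light_pos, shading_pos)]
    dist = math.sqrt(sum(c * c for c in to_light))
    wi = [c / dist for c in to_light]
    cos_theta = abs(sum(w * n for w, n in zip(wi, normal)))
    brdf = albedo / math.pi       # Lambertian BRDF (an assumption of this sketch)
    li = point_light_radiance(power, light_pos, shading_pos)
    return li * brdf * cos_theta

# A light of power 4*pi placed 2 units above the point gives Li = 1/4.
value = shade_point_light(4.0 * math.pi, (0.0, 0.0, 2.0), (0.0, 0.0, 0.0),
                          (0.0, 0.0, 1.0), albedo=1.0)
```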

Rendered direct illumination result of sponza is shown below.

DI Comparison: sponza

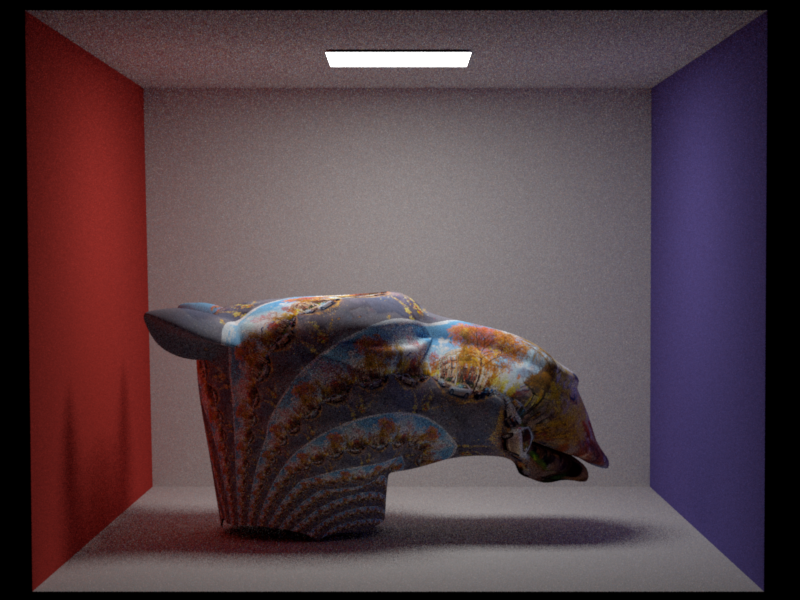

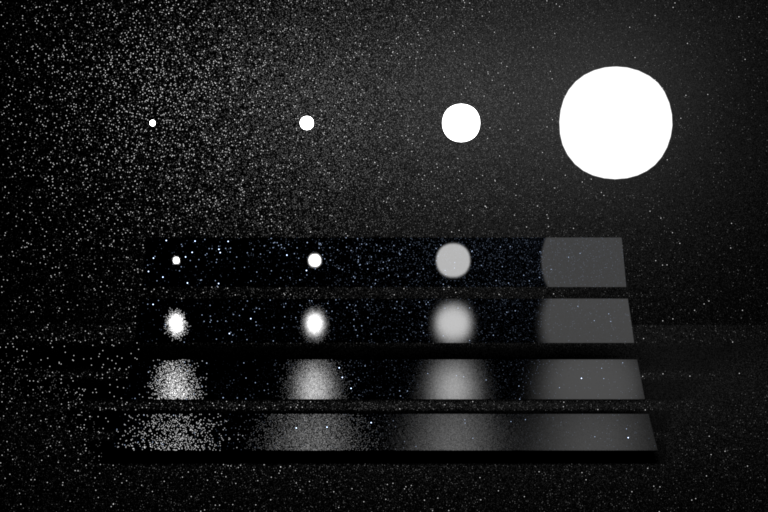

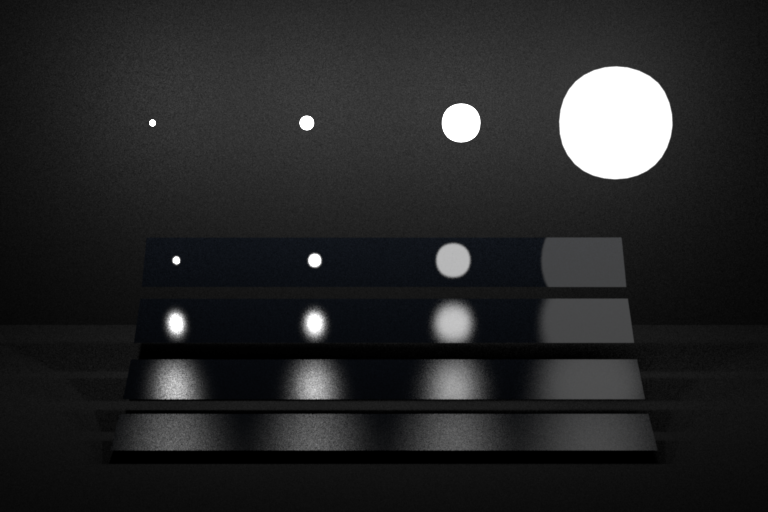

Light Sampling

In the following, we implement different sampling methods for rendering, including BRDF sampling, area light sampling and multiple importance sampling (MIS). First is light sampling.

Integrator Implementation

We denote by direct_ems the integrator that samples area lights. For each primary camera ray, we sample a point on the light source and fill in the EmitterQueryRecord. Besides, for the primary intersection point we evaluate the BRDF value from the given information (wi, wo, etc.). Then we simply compute all the terms needed in the rendering equation (including cos(theta_o)).

Shape Area Light

Implementing a light source takes three functions: eval() returns the radiance of a sample, and pdf() returns its probability density. Note that pdf() should account for both the conversion to solid angle and the distance from the light source to the shading point; this follows from the change of variables in the integral equation. The sample function then fills the EmitterQueryRecord and samples a secondary shadow ray given the primary camera ray. Lastly, the corresponding value is evaluated as eval/pdf.
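The change of variables mentioned for pdf() can be written out as a small Python sketch. It assumes the point on the light was sampled with a density `pdf_area` measured per unit area, and converts it to a density per unit solid angle as pdf_area * d^2 / |cos(theta_light)|. The function name and argument layout are hypothetical and do not mirror the EmitterQueryRecord fields.

```python
import math

def area_pdf_to_solid_angle(pdf_area, shading_pos, light_pos, light_normal):
    """Convert a per-area sampling density on the light to a per-solid-angle density."""
    to_light = [l - s for l, s in zip(light_pos, shading_pos)]
    dist2 = sum(c * c for c in to_light)
    dist = math.sqrt(dist2)
    wi = [c / dist for c in to_light]
    # Cosine between the light's normal and the direction back to the shading point.
    cos_light = abs(sum(-w * n for w, n in zip(wi, light_normal)))
    if cos_light == 0.0:
        # Light seen edge-on: the conversion is undefined, so this sketch returns 0
        # and expects the caller to skip the sample.
        return 0.0
    return pdf_area * dist2 / cos_light

# Uniform sampling of a light of area 2 facing straight down, 2 units above the point.
pdf = area_pdf_to_solid_angle(0.5, (0.0, 0.0, 0.0), (0.0, 0.0, 2.0), (0.0, 0.0, -1.0))
```

Moving the light farther away or tilting it away from the shading point shrinks the solid angle it subtends, so the same area density maps to a larger solid-angle density.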

Validation

Here are the results of light source sampling for the odyssey and veach scenes:

odyssey_ems

veach_ems

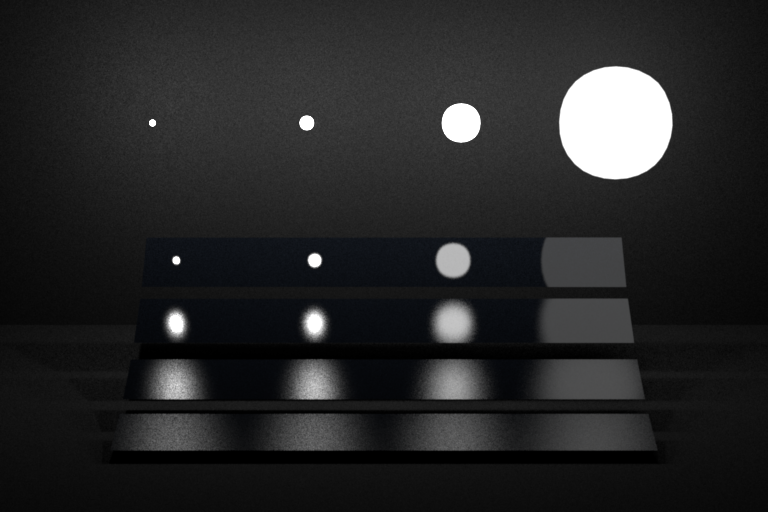

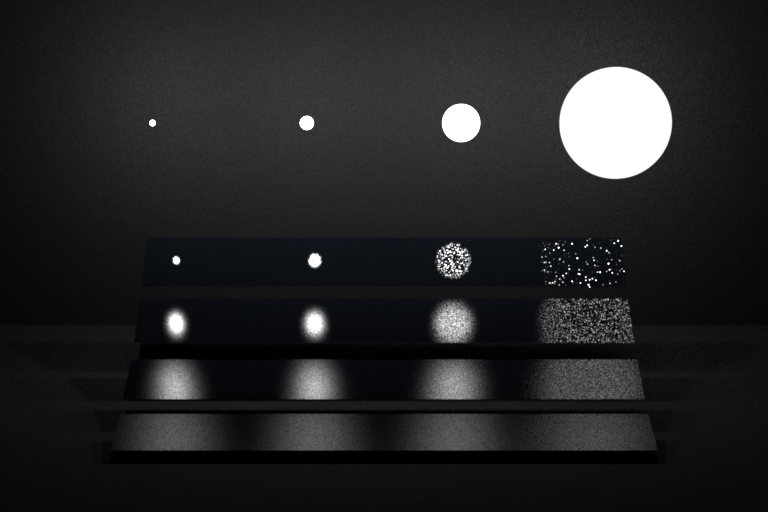

BRDF Sampling

BRDF sampling is another sampling technique. Instead of sampling a light source, we sample the BSDF's normal wh, the direction wi, and the corresponding values. (TODO: refactor details related to solid angle and microfacet BRDF)

Integrator Implementation

We denote by "direct_mats" the BRDF sampling integrator, which samples the BSDF and evaluates the light radiance along the outgoing direction for each shading point. First we initialize a BsdfQueryRecord given wo (wi in nori), then we sample the BSDF normal and return the BRDF value. It is later multiplied by the evaluated light radiance.

Microfacet BRDF

Validation

Here are the results of BRDF sampling for the odyssey and veach scenes, along with the warptests:

odyssey_mats

veach_mats

Part 3: Multiple Importance Sampling

MIS combines the advantages of both BRDF and light sampling.

Integrator Implementation

Here we combine the light sampling and the BRDF sampling implementation. (TODO: refactor details).
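One common way to combine the two strategies is the balance heuristic, sketched below in Python. The report does not say which heuristic it uses, so this is an assumption; `balance_heuristic` and `mis_estimate` are hypothetical names, and each strategy is assumed to contribute exactly one sample per shading point.

```python
def balance_heuristic(pdf_a, pdf_b):
    """MIS weight for a sample drawn from strategy A when B could also generate it."""
    total = pdf_a + pdf_b
    return pdf_a / total if total > 0.0 else 0.0

def mis_estimate(f_light, pdf_light_l, pdf_bsdf_l, f_bsdf, pdf_bsdf_b, pdf_light_b):
    """Combine one light sample and one BSDF sample into a single estimate.

    f_light, f_bsdf: integrand value (radiance * BSDF * cosine) for each sample.
    pdf_light_l, pdf_bsdf_l: both strategies' densities at the light sample.
    pdf_bsdf_b, pdf_light_b: both strategies' densities at the BSDF sample.
    All densities must be expressed in the same measure (solid angle here).
    """
    result = 0.0
    if pdf_light_l > 0.0:
        result += balance_heuristic(pdf_light_l, pdf_bsdf_l) * f_light / pdf_light_l
    if pdf_bsdf_b > 0.0:
        result += balance_heuristic(pdf_bsdf_b, pdf_light_b) * f_bsdf / pdf_bsdf_b
    return result

estimate = mis_estimate(2.0, 4.0, 1.0, 2.0, 2.0, 2.0)
```

The two weights for any given direction sum to one, which is what keeps the combined estimator unbiased while letting the better-matched strategy dominate.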

Validation

Here are the results from MIS for the odyssey and veach scenes, with screenshots of passed tests:

odyssey_mis

veach_mis

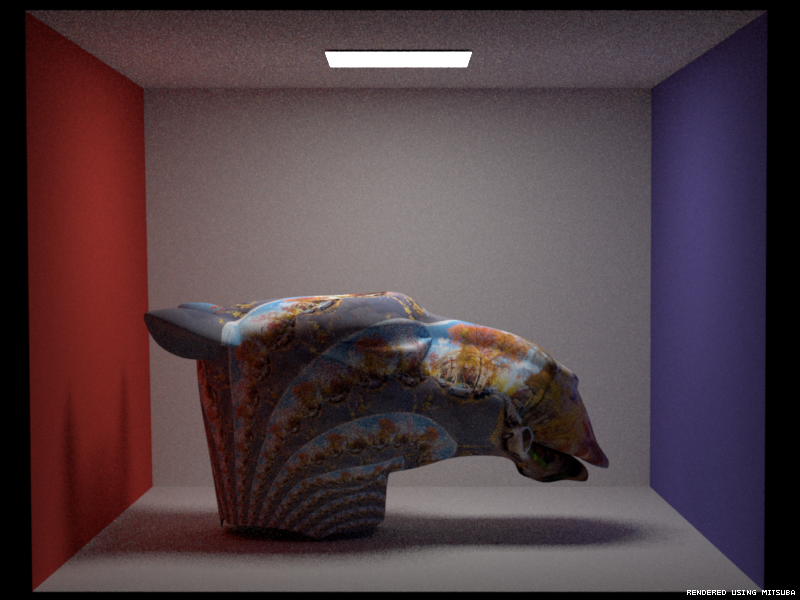

Image Validation

Below are two 4-way comparisons, one for each of the 2 scenes, covering direct_ems, direct_mats, direct_mis, and the reference MIS rendering.

Path Tracing

path_mats Implementation

By adding a while loop and russianRoulette, we turn direct_mats into path_mats. We use russianRoulette with successProb = min(t, 0.99).
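The termination rule can be illustrated with a short Python sketch. It assumes that t is a scalar path throughput (for example the largest RGB component), which the report does not state explicitly; the function name and return convention are hypothetical.

```python
import random

def russian_roulette(throughput, rng, cap=0.99):
    """Randomly terminate a path; survivors are reweighted to keep the estimate unbiased.

    Returns (survived, new_throughput).
    """
    success_prob = min(throughput, cap)
    if success_prob <= 0.0 or rng.random() >= success_prob:
        return False, 0.0                      # path is terminated
    return True, throughput / success_prob     # compensate for the killed paths

# Averaged over many trials, the reweighted throughput matches the original value.
rng = random.Random(1)
trials = 20000
mean = sum(russian_roulette(0.5, rng)[1] for _ in range(trials)) / trials
```

Dividing by the survival probability is what makes the expected throughput equal to the value before the roulette, so low-throughput paths can be cut early without biasing the image.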

path_mis

This is trickier to implement. More details can be found in the code.

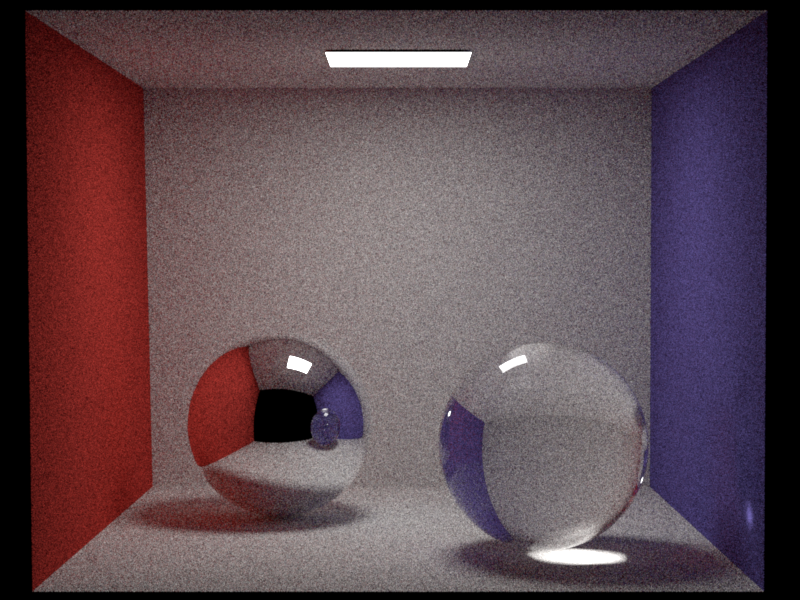

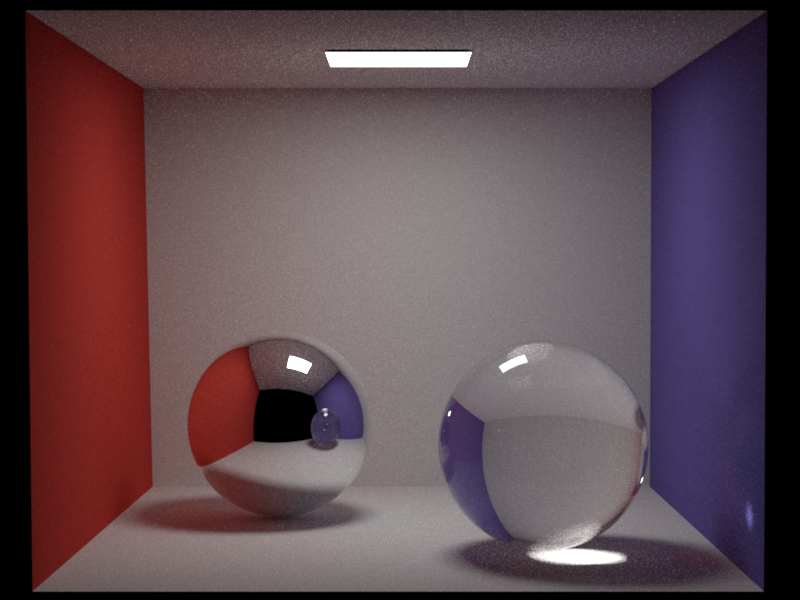

Validation

Here are two screenshots of the 2 passing tests, followed by 3-way comparisons on 3 scenes:

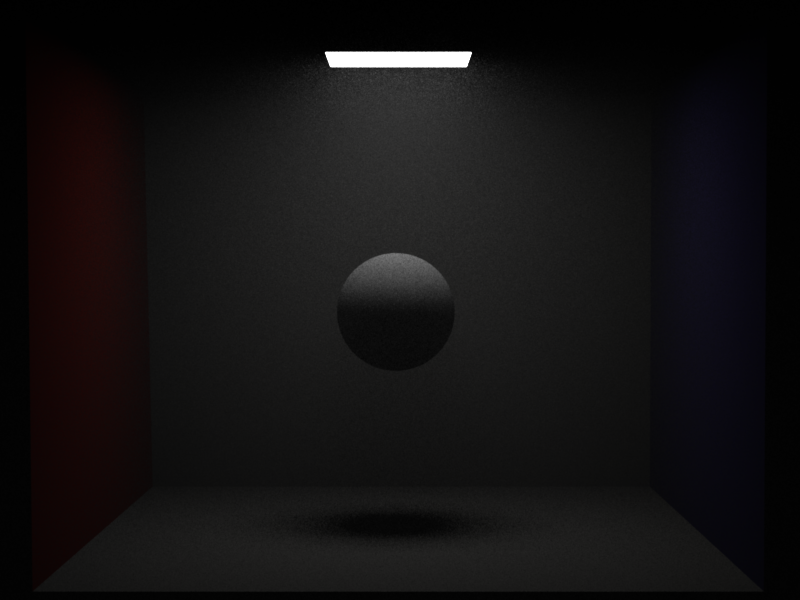

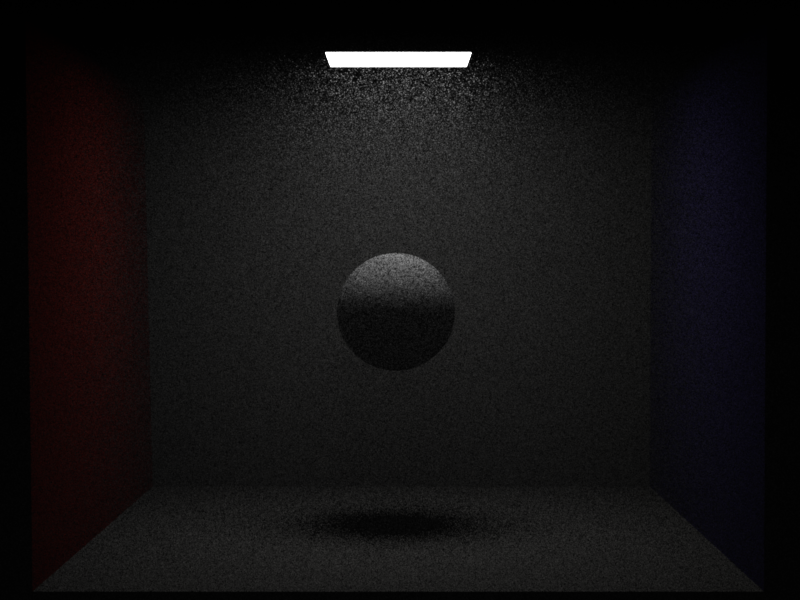

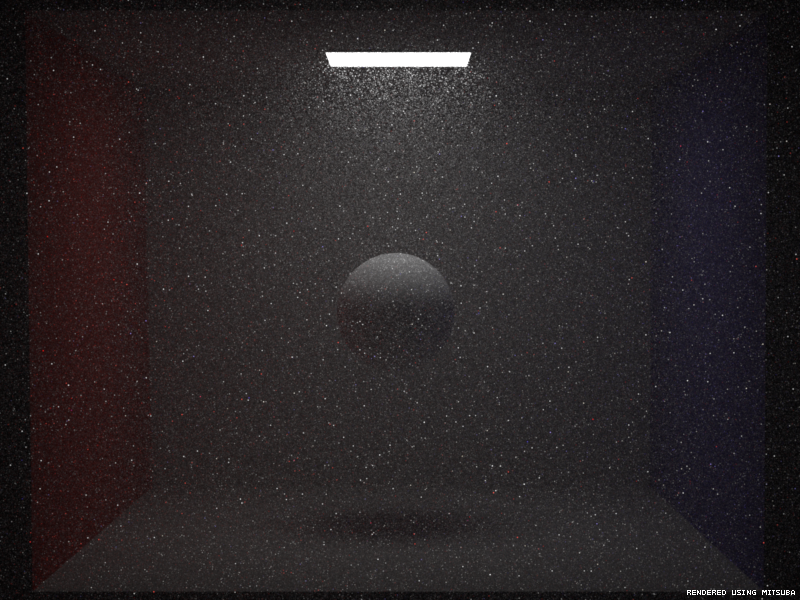

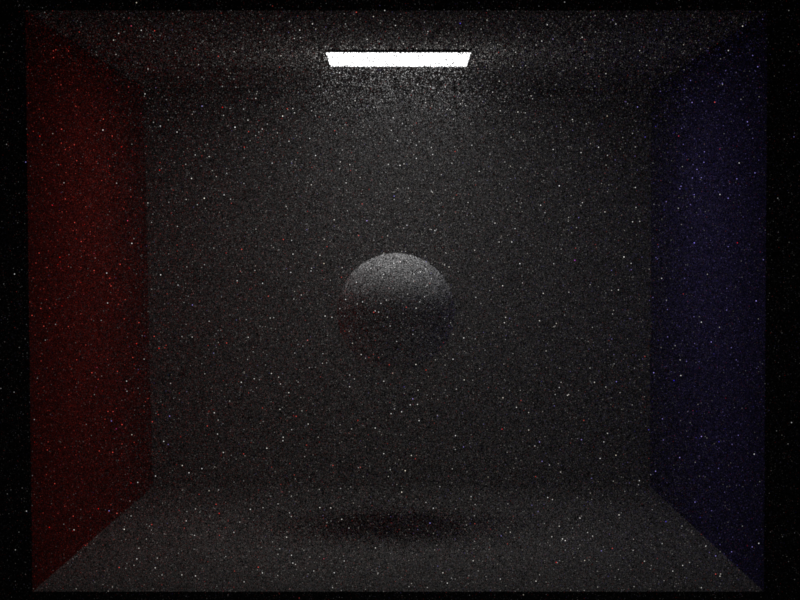

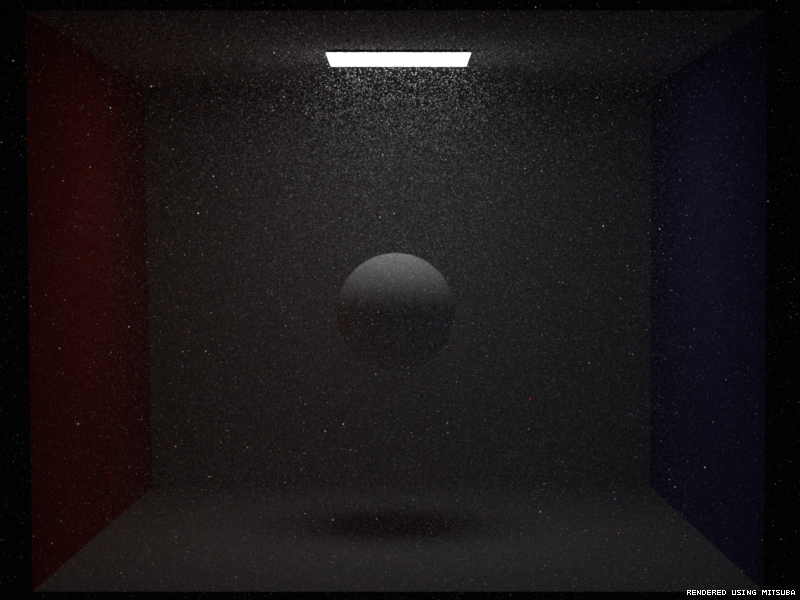

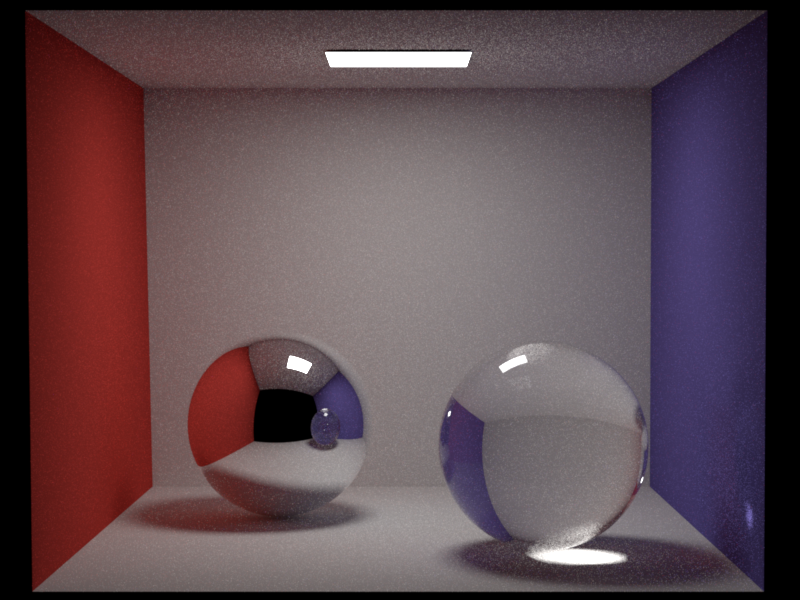

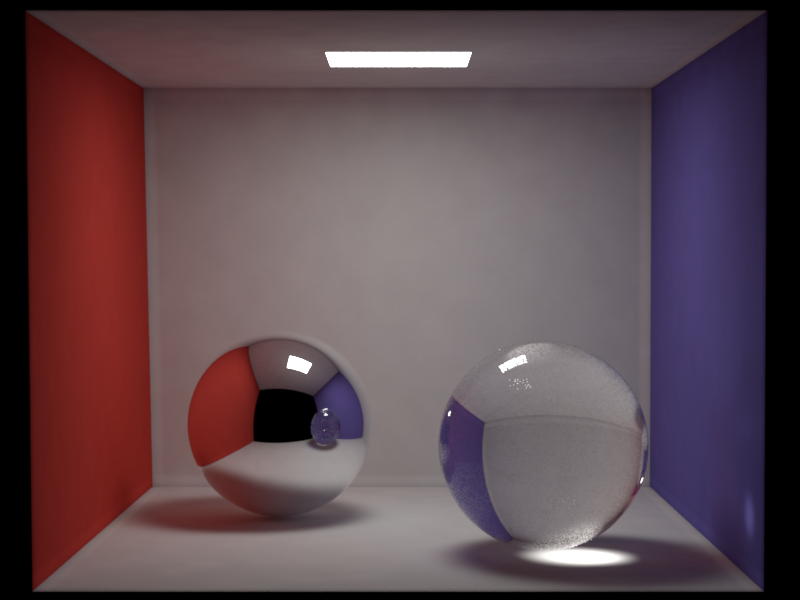

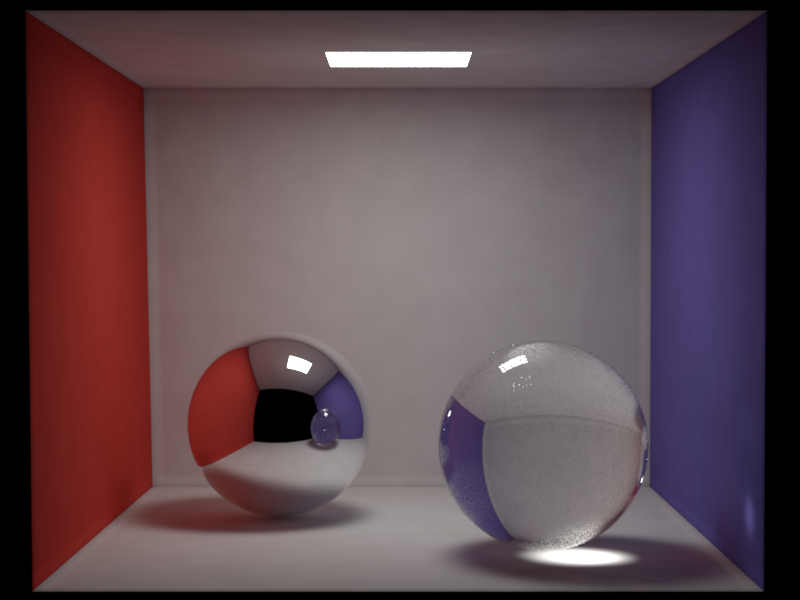

Cornell box scene

Table scene

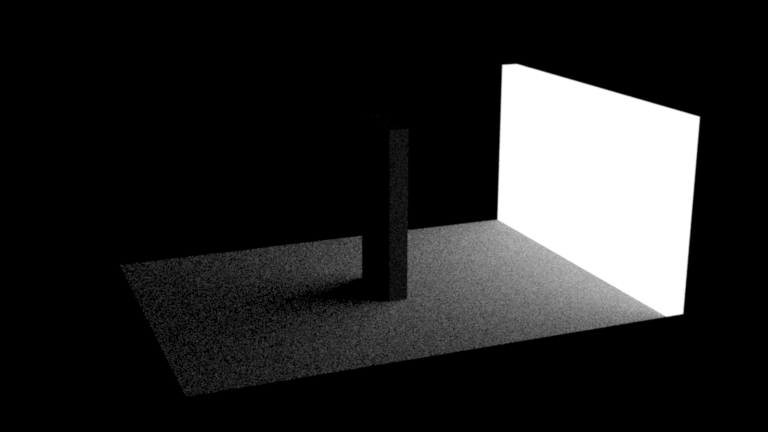

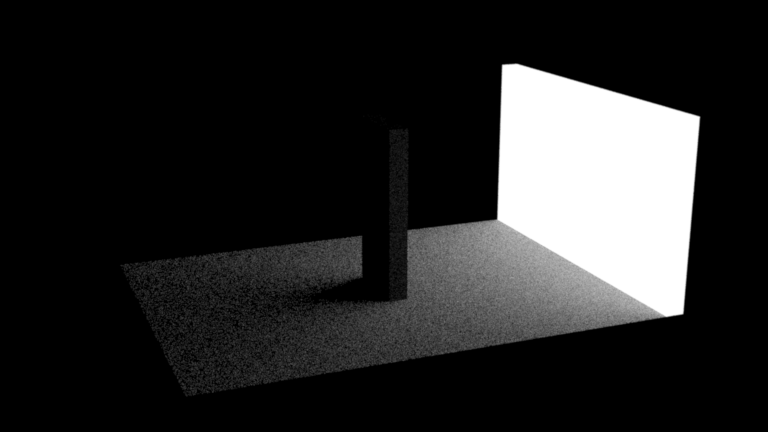

Photon Mapping

Overall, photon mapping is a method well suited to rendering caustics, but it is usually slower than path tracing. It is biased and can introduce blocky artifacts when the number of photons is not large enough.

Implementation

Here we use two path tracing steps. First, we emit photons from the emitters in a while loop; each photon is stored when it hits a diffuse surface and then a new ray is sampled, until the path is stopped by russianRoulette. After that, we trace paths from the camera and estimate the photon density on diffuse surfaces. For delta BRDFs, we simply use the same method as path_mats.
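The photon density estimate in the second step can be sketched as follows. This Python example uses a brute-force search in place of a spatial acceleration structure and assumes a monochrome Lambertian surface; the photon layout `(position, power)`, with power already divided by the number of emitted photons, and the function name are assumptions of this sketch rather than the report's data structures.

```python
import math

def photon_radiance_estimate(photons, x, radius, albedo):
    """Estimate reflected radiance at x from the photons within `radius`.

    photons: list of (position, power) pairs, with power already divided by
    the number of emitted photons (an assumption of this sketch).
    """
    r2 = radius * radius
    flux = 0.0
    for pos, power in photons:
        if sum((p - q) ** 2 for p, q in zip(pos, x)) <= r2:
            flux += power                      # photon lies inside the gather disc
    brdf = albedo / math.pi                    # Lambertian BRDF
    return brdf * flux / (math.pi * r2)        # flux density over the disc area

photons = [((0.0, 0.0, 0.0), 0.5), ((0.1, 0.0, 0.0), 0.5), ((5.0, 0.0, 0.0), 1.0)]
radiance = photon_radiance_estimate(photons, (0.0, 0.0, 0.0), 1.0, albedo=1.0)
```

Because the flux is averaged over a disc of fixed radius, too few photons per disc produce the blotchy appearance mentioned earlier, while a larger radius trades that noise for blur.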

Validation

Here are three 2-way comparisons covering all of the scenes. There are some differences in the background because of randomness and minor differences in the dielectric material.

Cornell box scene

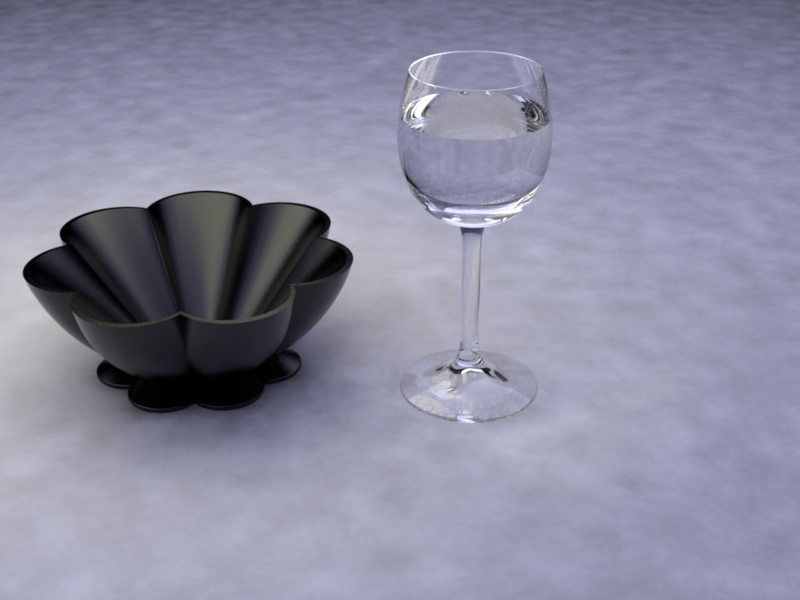

Table scene

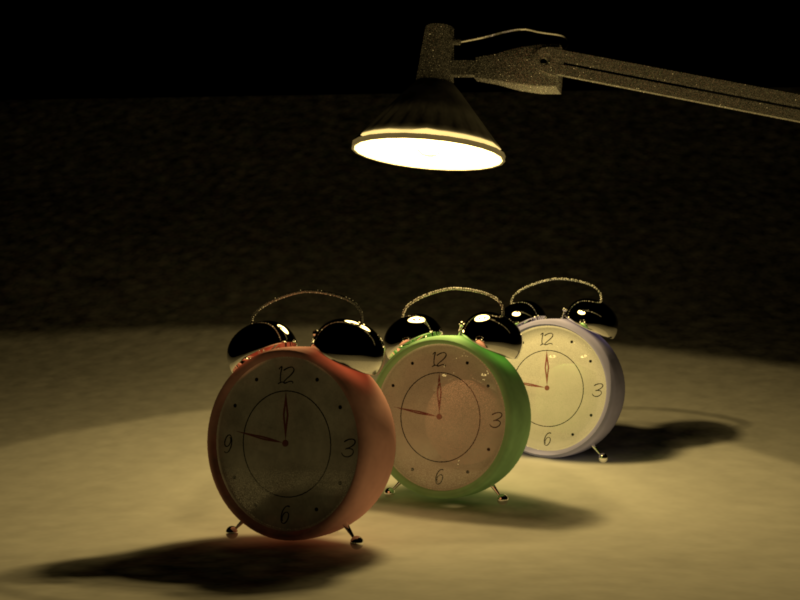

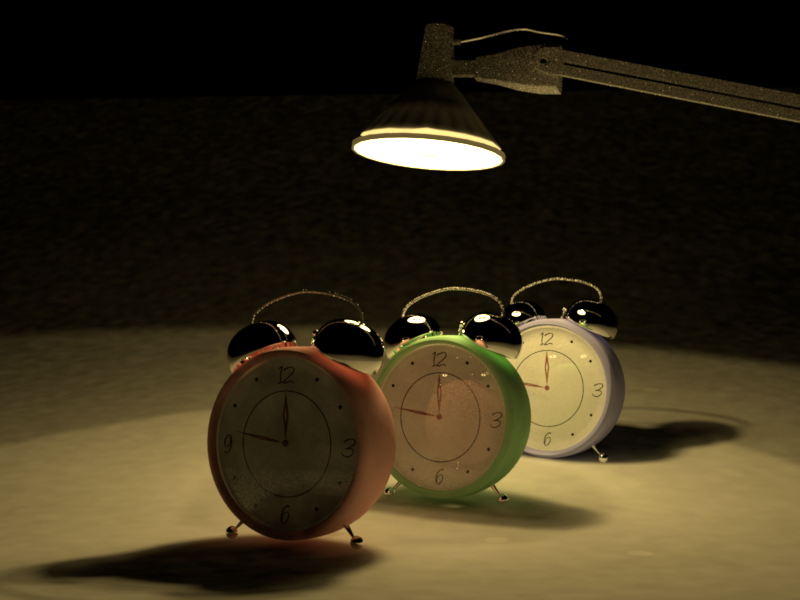

Clocks scene