|

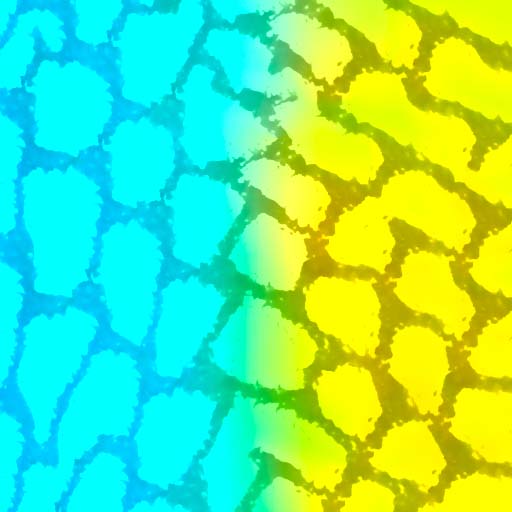

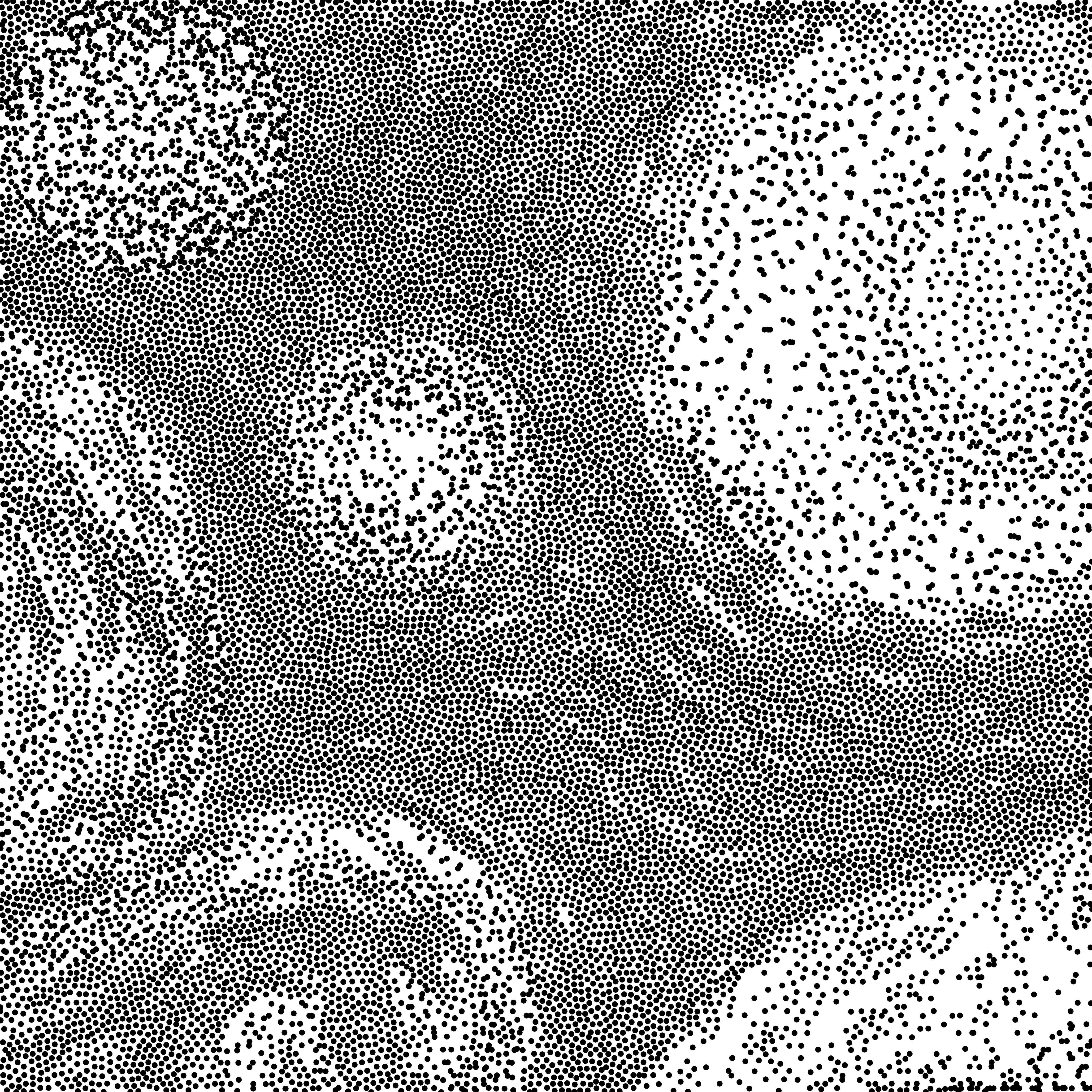

Edited input density and correlation (LAB)

|

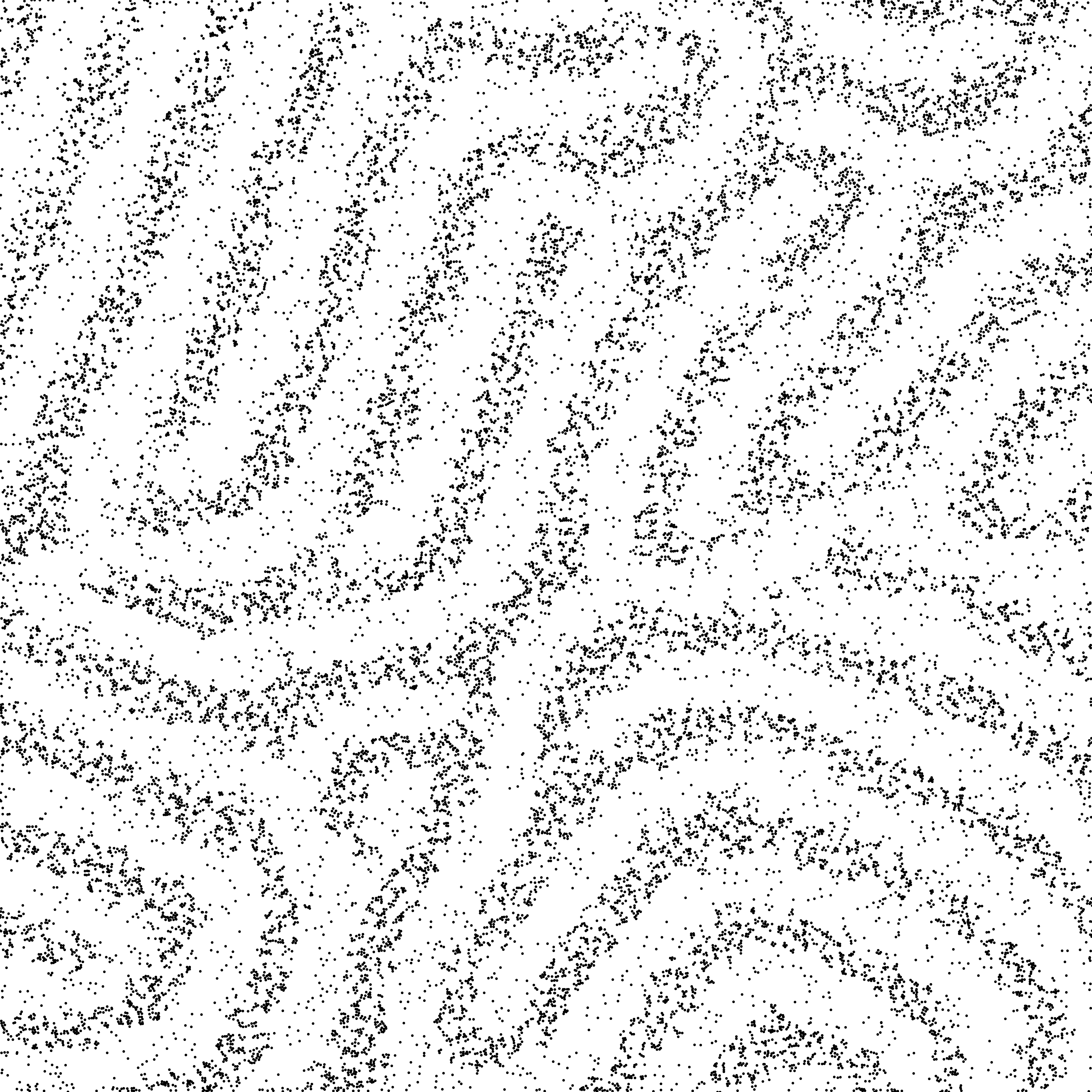

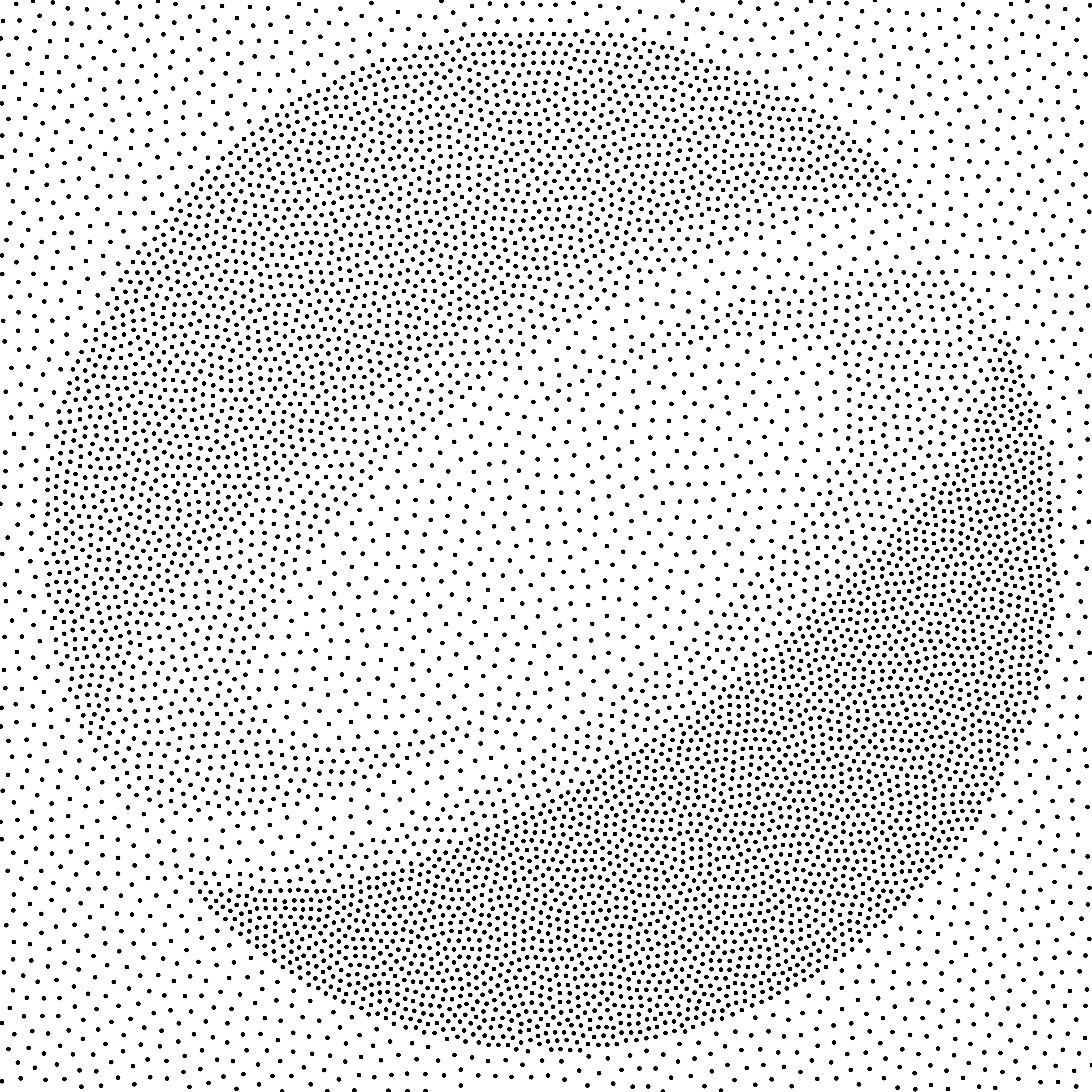

Edited input density (L)

|

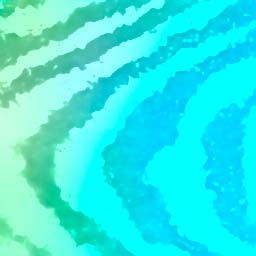

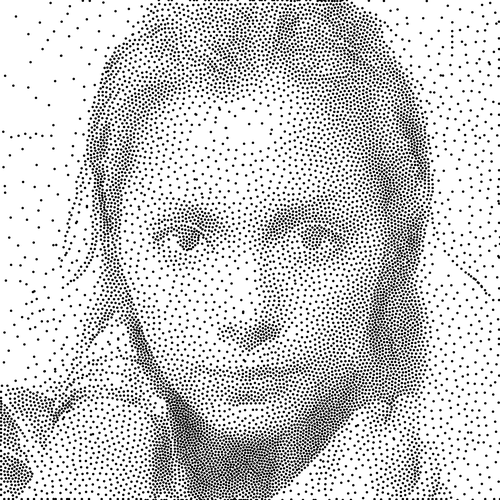

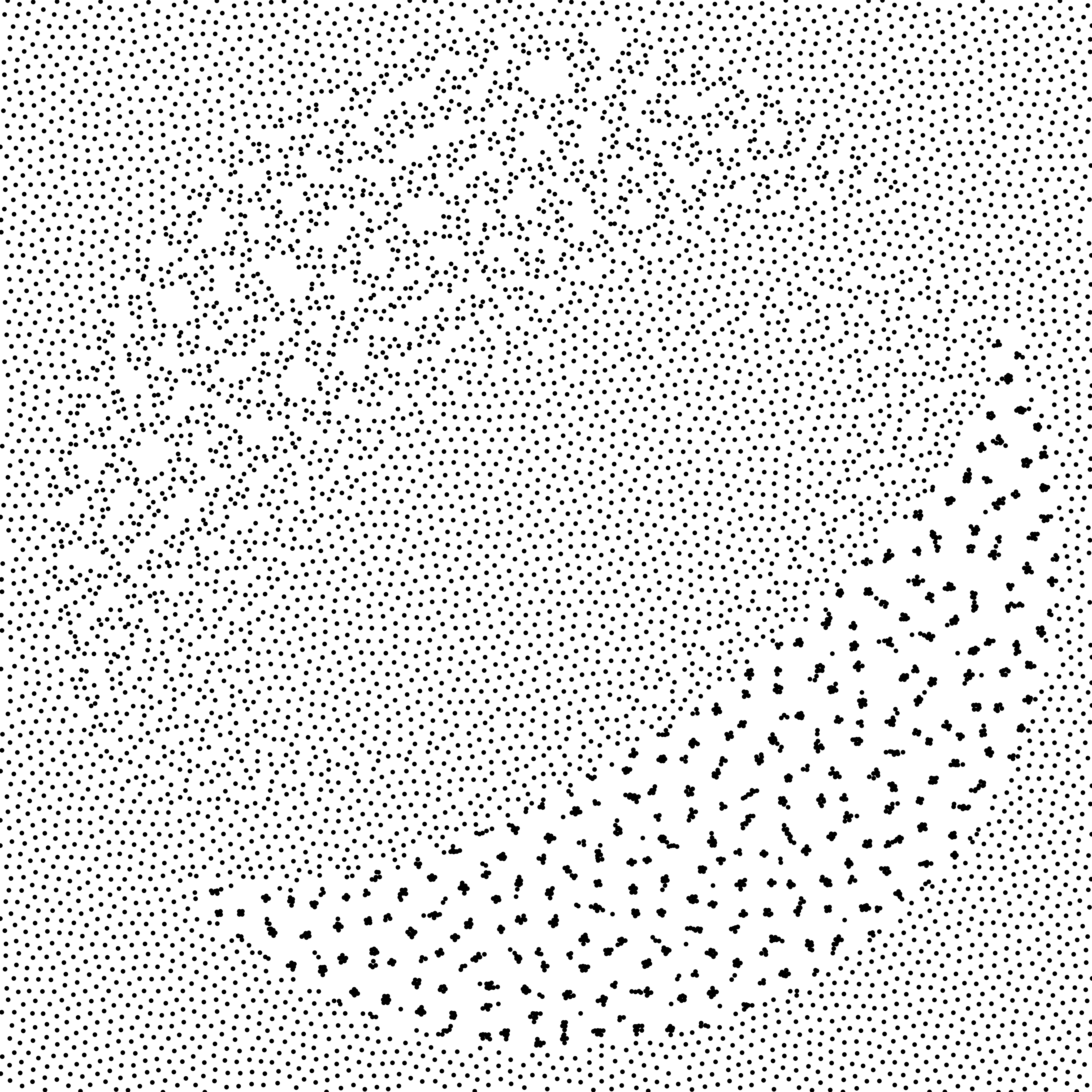

Edited input correlation (AB)

|

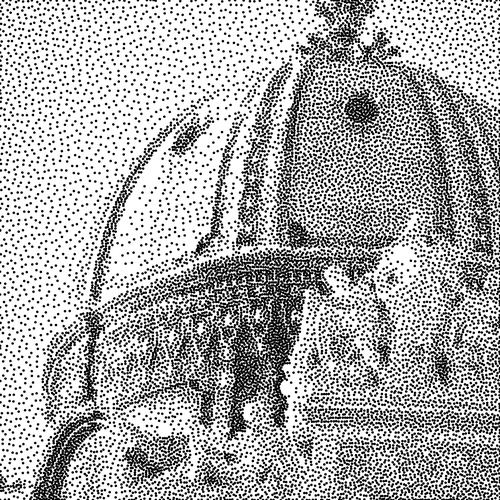

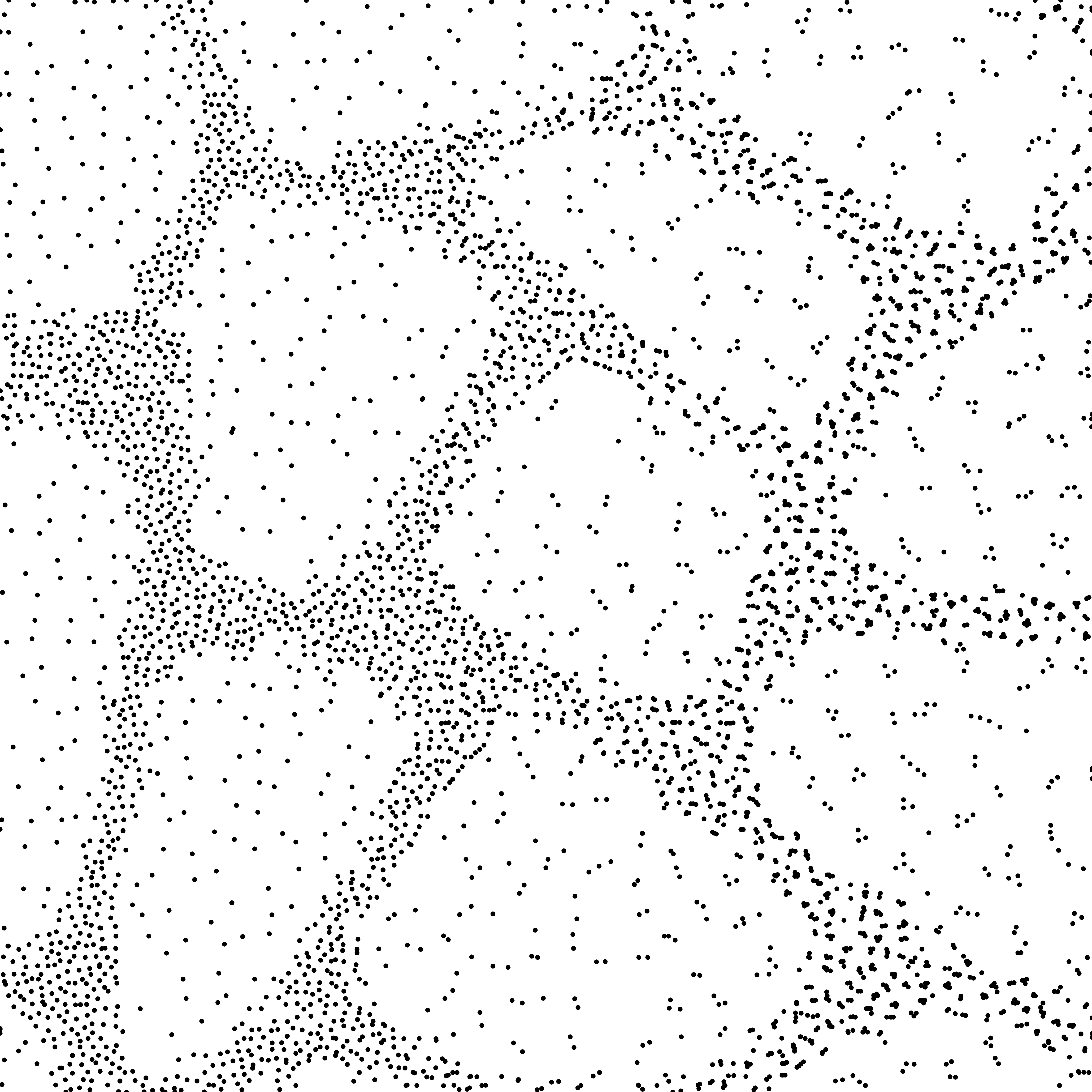

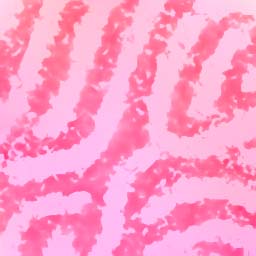

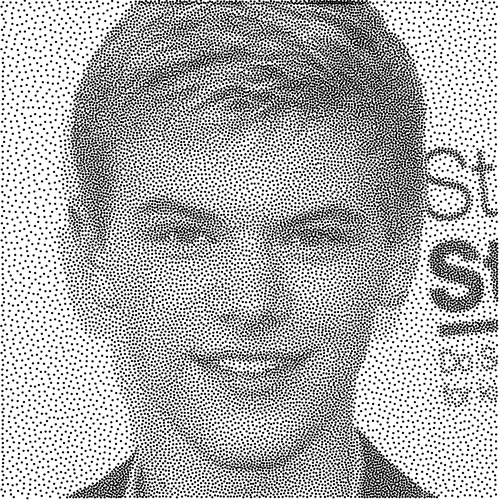

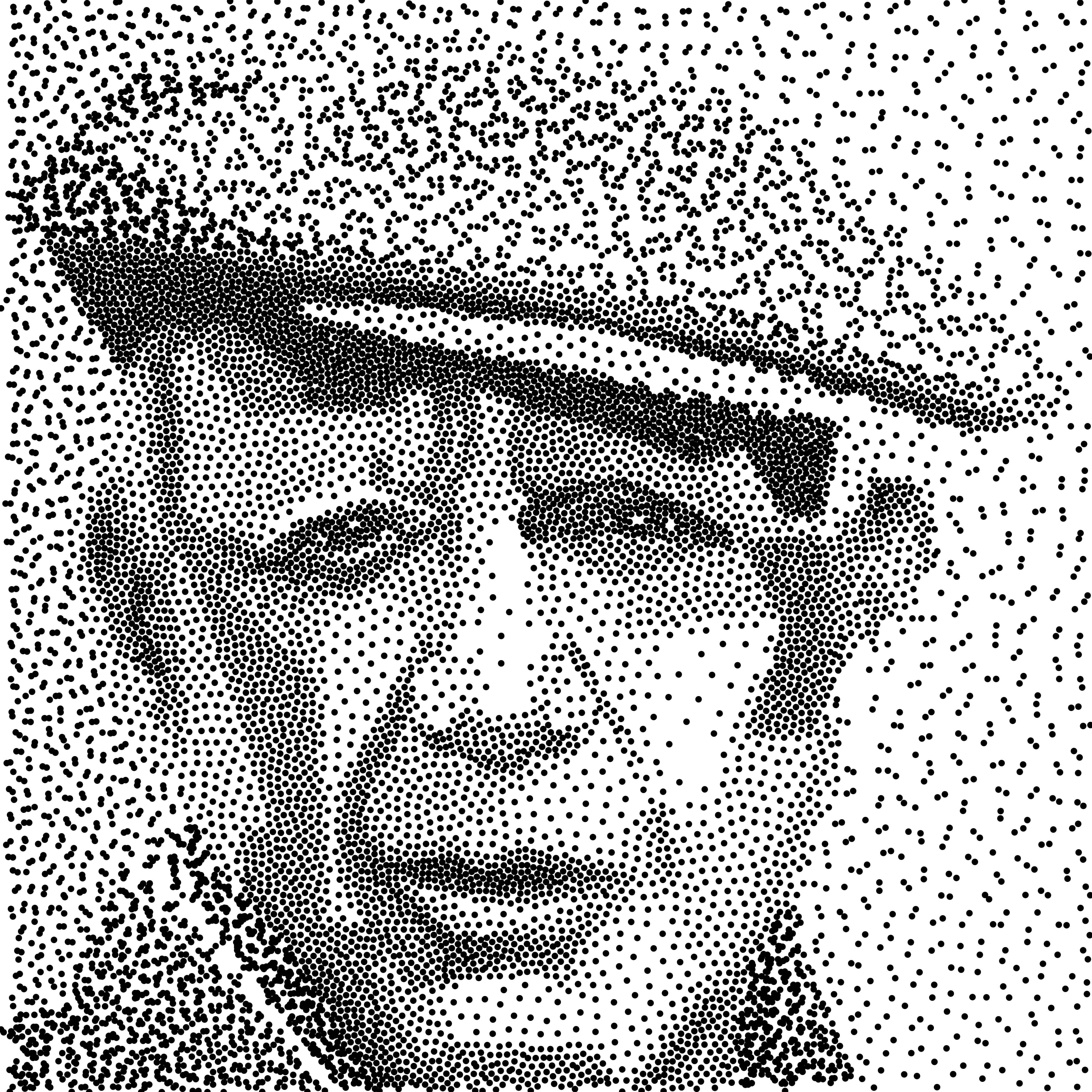

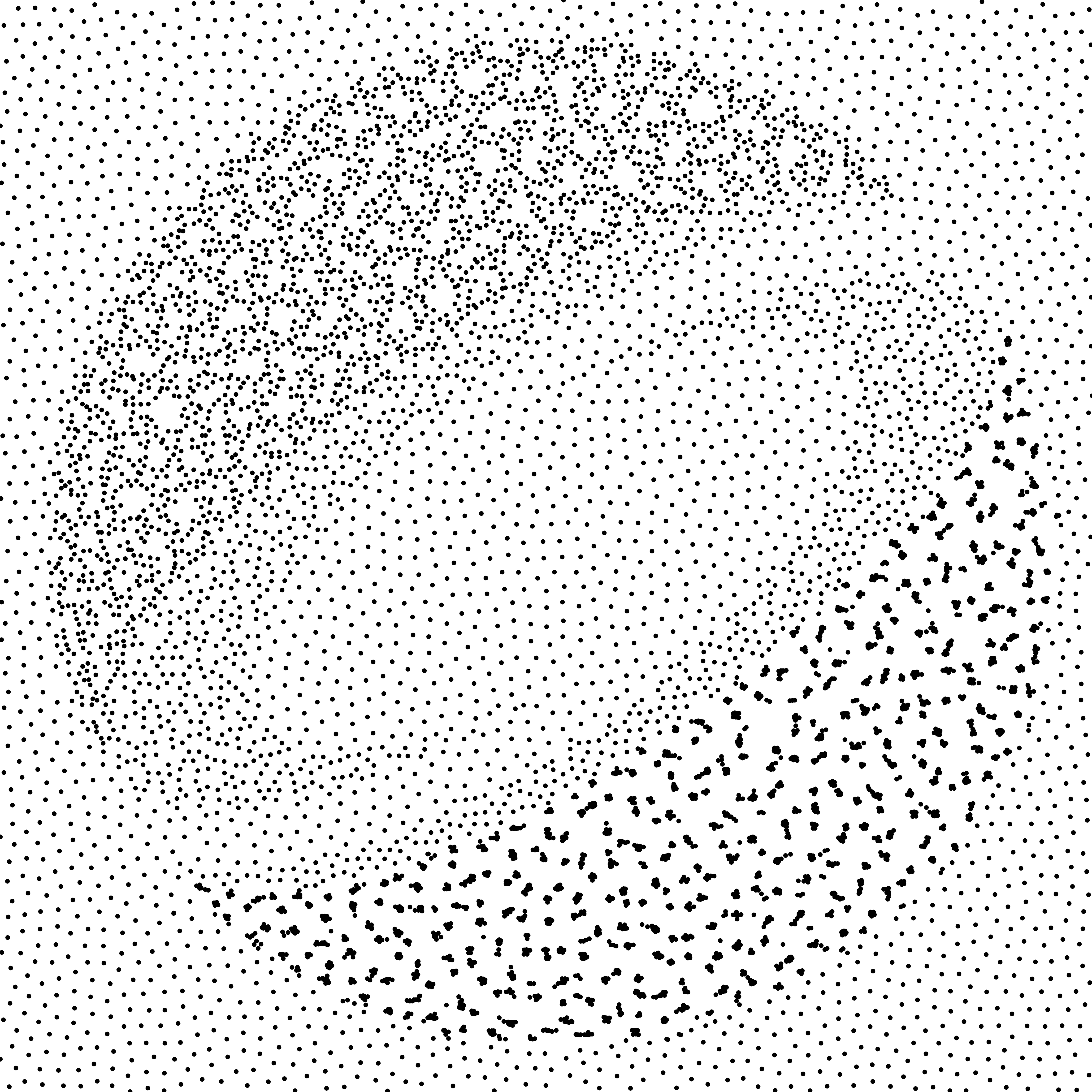

Edited output point pattern

|

|

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

Edited output point pattern

|

|

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

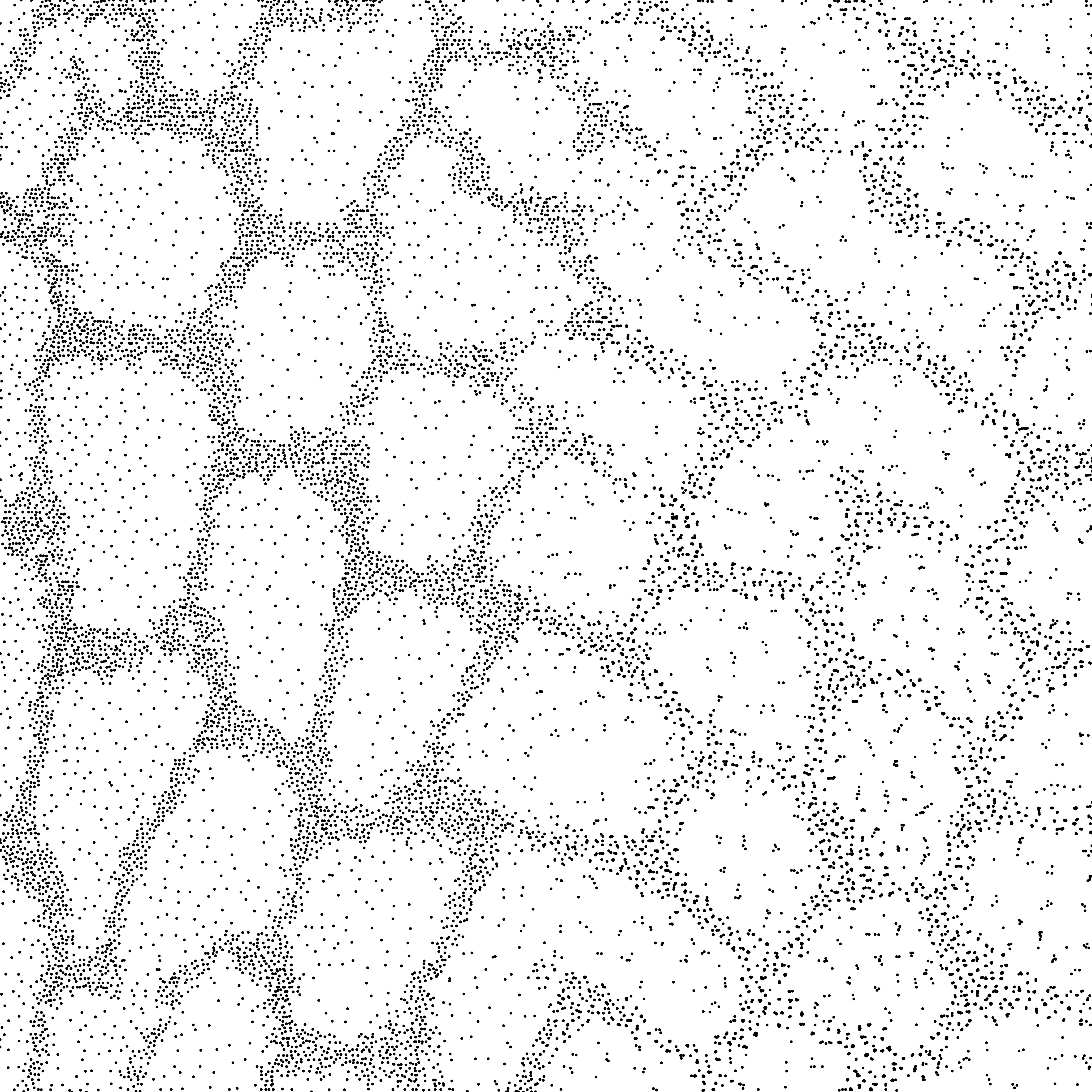

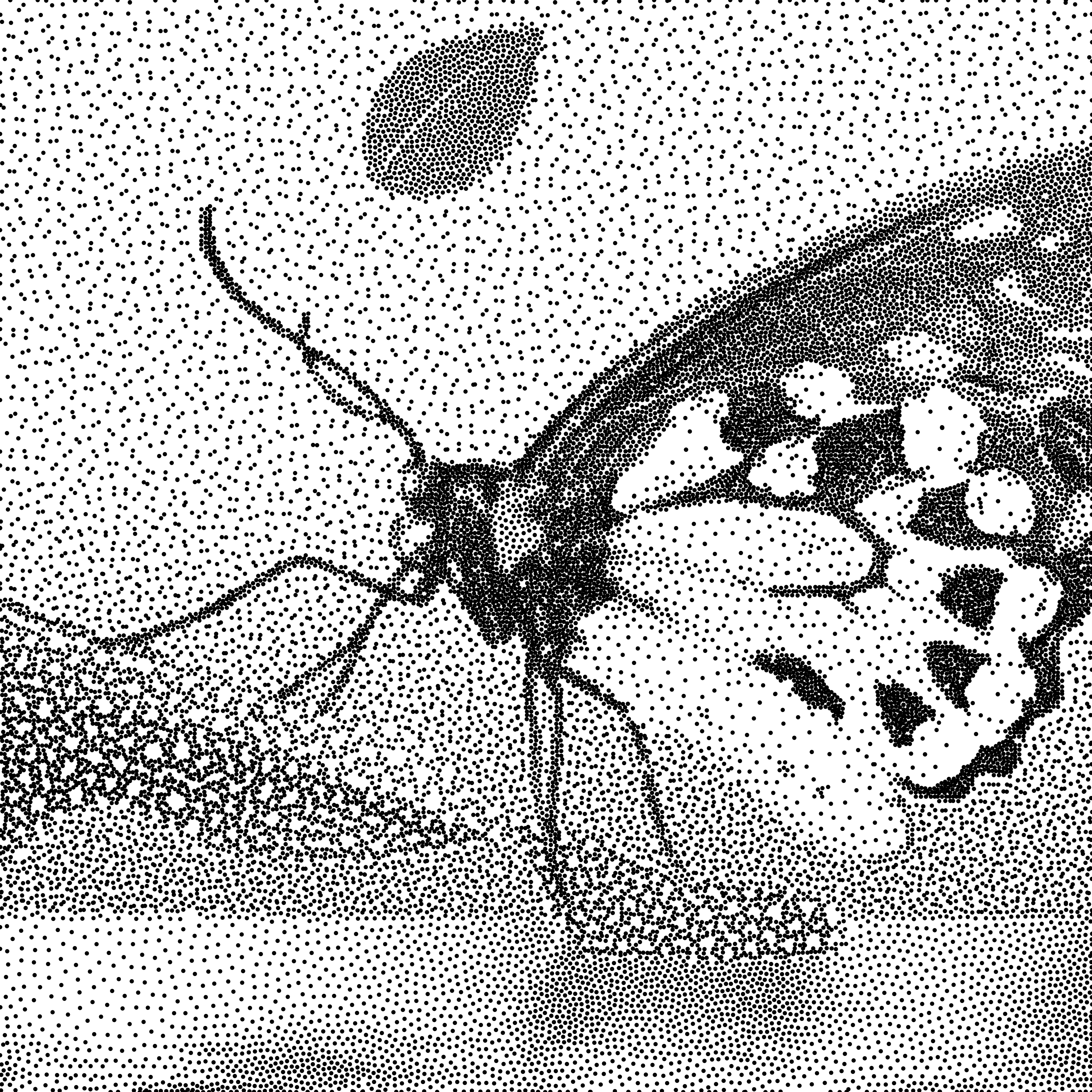

Edited output point pattern

|

|

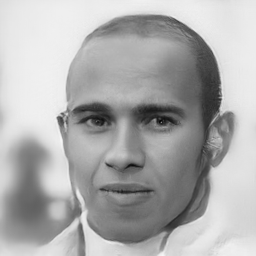

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

Edited output point pattern

|

|

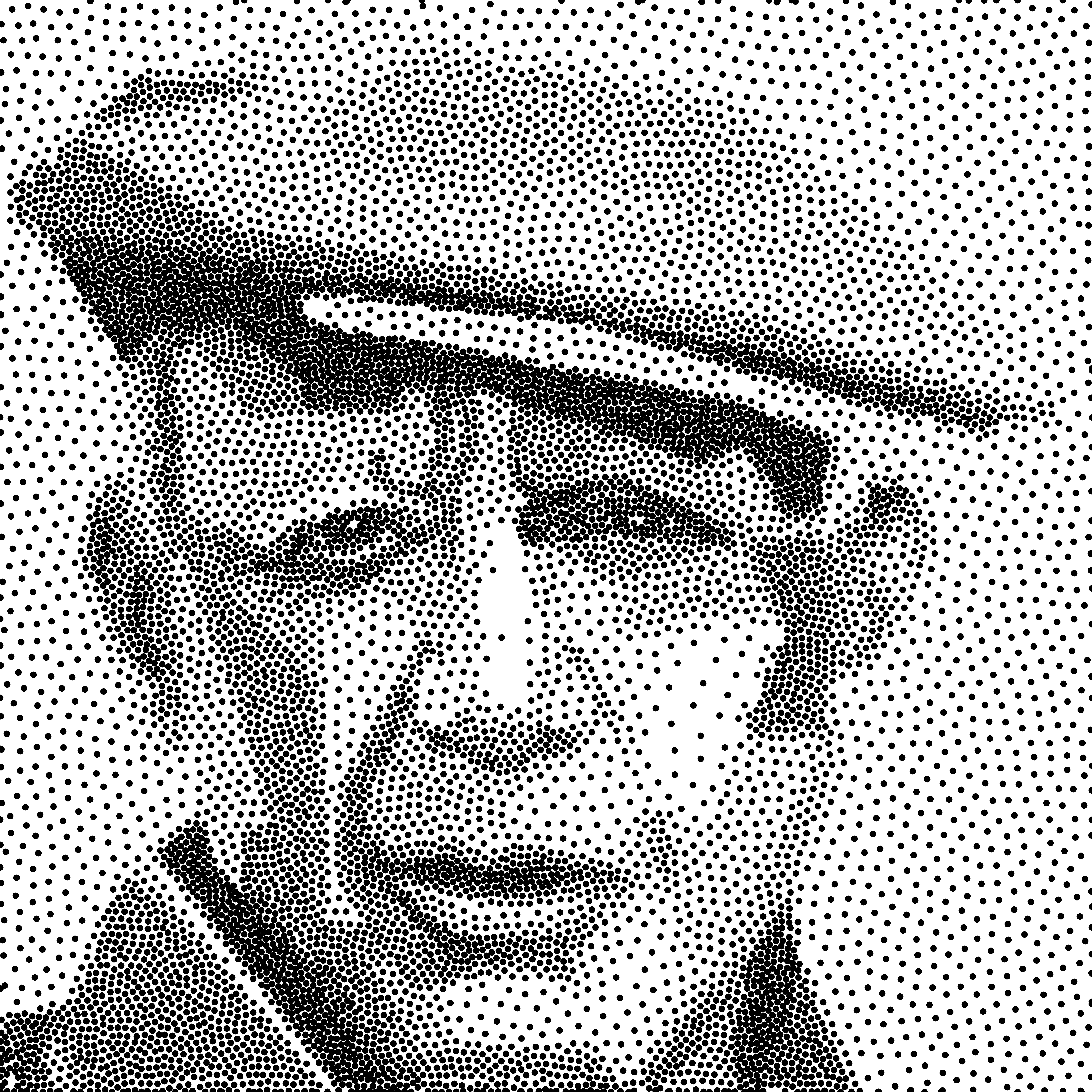

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

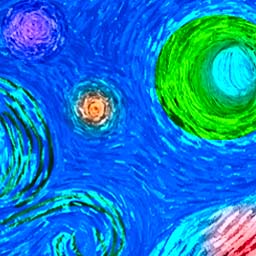

Edited output point pattern

|

|

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

Edited output point pattern

|

|

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

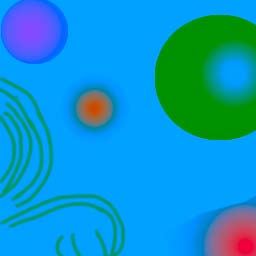

Edited output point pattern

|

|

Edited input density and correlation (LAB)

|

Edited input density (L)

|

Edited input correlation (AB)

|

Edited output point pattern

|